This month’s search roundup dives into the biggest developments shaping the SEO landscape. From a recent surge in ranking volatility throughout April and new experiments in AI-powered search, to practical updates for ecommerce and local SEO, we’ll discuss it all below.

Throughout April, several instances of ranking volatility were noted. SEO monitoring tools all recorded large movements on the 22nd, 23rd and 25th of April, which has prompted many to question whether a new Google algorithm update is on the horizon.

In other news, Google has been testing the integration of AI Overviews into local search results, experimenting with new formats that could replace or reposition the traditional local pack and knowledge panel.

Google has also rolled out a new Merchant Opportunities report in Search Console. Merchants connected via Google Merchant Center will now see practical suggestions to improve product listings, such as adding shipping info, store ratings, or payment options.

Finally, a couple of interviews with Google representatives have given us more insight into the newly emerging markdown-based llms.txt files and into Google’s stance on Server-Side vs. Client-Side Rendering. We’ll explore these updates in more detail in the article below.

Allow our traffic light system to guide you to the articles that need your attention. Watch out for red light updates, as they’re major changes that will need you to take action. Amber updates may make you think and are definitely worth knowing, but aren’t urgent. Finally, green light updates are great for your SEO and site knowledge, but are less significant than the others.

Keen to know more about any of these changes and what they mean for your SEO? Get in touch or visit our SEO agency page to find out how we can help.

- Google search ranking volatility spiked in April

- Is AI Overviews going to replace Local Search?

- Google Search Console updates its Merchant Opportunities report

- How to track traffic from AI Overviews, Featured Snippets, or People Also Ask results in GA4

- How to rank product pages in Google’s new product SERPs

- Server-Side vs. Client-Side Rendering: What Google recommends

- Google says llms.txt is comparable to the keywords Meta Tag

On April 25, 2025, a significant spike in Google Search ranking volatility was observed, marking the second occurrence within the same week. This followed earlier fluctuations on April 22 and 23 and previous disturbances on April 16 and April 9. Despite these repeated shifts, Google has not officially confirmed any updates beyond the March 2025 core update.

Multiple SEO tracking tools, including Semrush, Mozcast, SimilarWeb, and Sistrix, reported notable surges in volatility on April 25. These spikes suggest substantial changes in search rankings across various websites.

The SEO community has echoed these findings, with webmasters reporting significant declines in traffic. Some noted drops of up to 70%, particularly in Google Discover traffic. Others saw their websites disappear from search results or experienced erratic visibility. These disruptions have led to widespread frustration, with some users expressing concern over the increasing dominance of ads and a perceived decline in search result quality.

While the exact cause of this volatility remains unclear, the frequency and intensity of these fluctuations suggest ongoing, unannounced adjustments to Google’s search algorithms.

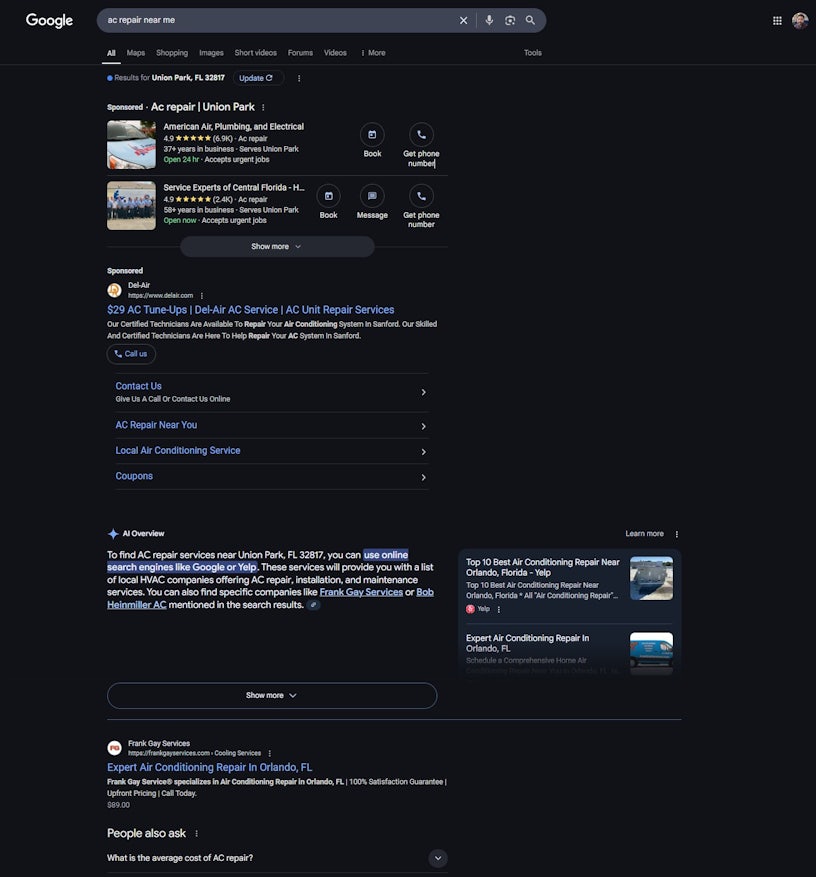

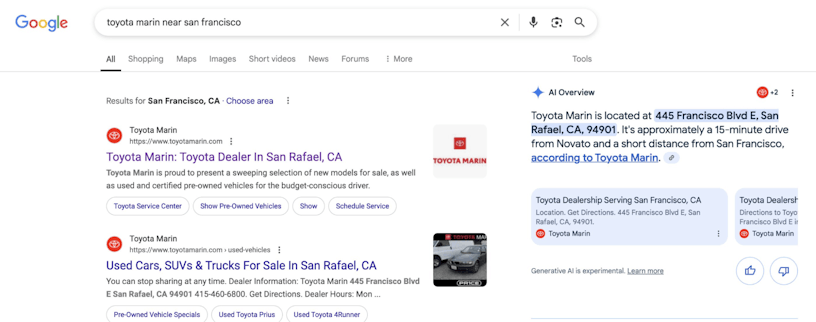

In early May 2025, Google initiated tests integrating AI Overviews into local search results, potentially altering the traditional presentation of local business information. One experiment involves replacing the familiar “local pack”, which typically displays a map and a trio of business listings for “near me” queries, with AI-generated summaries incorporating local details.

Another test positions AI Overviews on the right-hand side of the search results page, a space traditionally reserved for the local knowledge panel. This layout change indicates Google’s exploration of new formats for presenting AI-generated content alongside standard search results.

These developments are part of Google’s broader strategy to integrate AI Overviews into its search platform. Initially introduced in the U.S. in May 2024 and expanded to over 100 countries, including the U.K., AI Overviews provide concise, AI-generated summaries in response to user queries. While designed to enhance user experience by delivering quick information, the feature has faced criticism for potential inaccuracies and its impact on website traffic, as users may rely on summaries instead of visiting source sites.

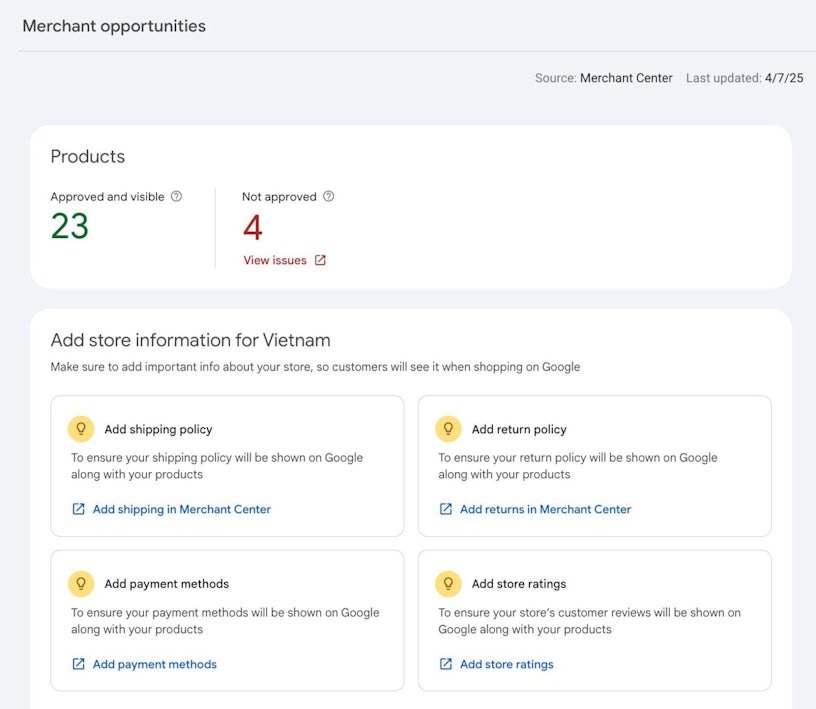

Google has announced the new Merchant Opportunities report within Google Search Console, which can show you recommendations for improving how your online shop appears on Google.

If you’ve created and associated your Merchant Center account under Merchant opportunities in Search Console, you’ll see suggested opportunities, including:

- Add shipping and returns

- Set up store ratings

- Add payment methods

You can return to the report to see if your information is pending, approved, or flagged for issues that need fixing.

This report helps you review which opportunities your ecommerce site setup is currently missing.

As Google continues evolving its Search interface, tracking organic traffic from newer search features, like AI Overviews (AIO), Featured Snippets, and People Also Ask (PAA), has become increasingly important for SEO professionals. These features often deliver users directly to a specific section of a web page using URL fragments, such as #:~:text=, highlighting the relevant text. However, traditional analytics tools, including Google Analytics 4 (GA4), do not automatically register or segment this kind of traffic.

To capture this data, businesses need to implement custom JavaScript variables in Google Tag Manager that extract the highlighted text from the URL, then send this information to GA4 using custom dimensions. This setup allows for the analysis of user interactions originating from these SERP features, providing insights into how users engage with highlighted content on your site. However, not all clicks from these features include identifiable URL fragments, so tracking may not capture every instance.

KP Playbook offers a practical guide to bridge this gap using Google Tag Manager (GTM) and GA4 custom dimensions.
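To make the parsing step concrete, here is a minimal sketch of how the highlighted text could be pulled out of a landing URL. It is written in Python purely for illustration; the real setup described above uses a custom JavaScript variable in GTM, and this is not the code from the KP Playbook guide. The function name `extract_highlighted_text` and the example URLs are our own assumptions, and browsers may hide this part of the URL from page scripts, so how you obtain the full URL is also an assumption.

```python
from urllib.parse import unquote

# Marker that separates a normal fragment from the text directive,
# as in "#:~:text=..." (hypothetical helper, for illustration only).
DIRECTIVE_DELIMITER = ":~:"


def extract_highlighted_text(url: str) -> list[str]:
    """Return the decoded text= values found in a URL's fragment."""
    _, _, fragment = url.partition("#")
    if DIRECTIVE_DELIMITER not in fragment:
        return []
    directive = fragment.split(DIRECTIVE_DELIMITER, 1)[1]
    snippets = []
    for part in directive.split("&"):
        if part.startswith("text="):
            # Decode percent-encoding such as %20 back into readable text.
            snippets.append(unquote(part[len("text="):]))
    return snippets


if __name__ == "__main__":
    landing = "https://example.com/guide#:~:text=ranking%20volatility"
    print(extract_highlighted_text(landing))
```

The returned value is the kind of string you would pass to GA4 as a custom dimension. URLs without a text directive return an empty list, which reflects the caveat above that not every click from these features can be identified.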

How to rank product pages in Google’s new product SERPs

In a recent article published on Sitebulb, Angie Perperidou shared seven strategies for optimising for product SERPs in 2025. The advice responds to the considerable changes we have seen in Google’s search results, which are shifting away from traditional listings of category pages towards more detailed product information shown directly in the results.

What’s changed?

Features like AI Overviews, image grids, and product carousels now give users access to images, prices, and reviews without needing to visit a website. As a result, product pages aren’t just competing with other ecommerce stores; they’re now competing with Google’s own interface. If a page lacks structured data, unique content, or visual appeal, it might not show up in search at all.

What e-commerce businesses can do to adapt

In the guide, Angie stresses that ecommerce businesses need to rethink how they optimise their product pages. She provided the following advice:

- Implement product-related schema markup (like Product, Offer, and Review) so Google can properly index and display your content in rich results.

- Target long-tail search queries that are more specific and less competitive to drive niche traffic and improve visibility.

- Avoid regurgitating manufacturer descriptions and create unique copy that answers customer needs and differentiates your offering.

- Add FAQs marked up with the appropriate schema directly on product pages. Tools like AlsoAsked, Reddit, Quora, and Google Search Console can help you discover what users are asking.

- Optimise product images and videos with descriptive filenames and alt text to help pages appear in image or video carousels whilst compressing files to improve page load speed (especially important for mobile users).

- Encourage and display customer reviews to build credibility and increase your chances of capturing rich search results, which can improve click-through rates.

- Pay attention to mobile optimisation to ensure that pages load quickly and function well across all devices.
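
As an illustration of the first point in the list above, the sketch below builds a Product JSON-LD block with a nested Offer. It is not taken from the Sitebulb guide: the helper names `build_product_jsonld` and `to_script_tag`, the sample product, and the use of an AggregateRating summary in place of individual Review items are all our own assumptions. Check the output against Google’s structured data documentation before relying on it.

```python
import json


def build_product_jsonld(name, description, image_url, price, currency,
                         rating_value, review_count):
    """Build a schema.org Product dictionary (hypothetical helper)."""
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,
        "image": [image_url],
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",  # price as a string with two decimals
            "priceCurrency": currency,
            # Assumption: the item is in stock; adjust per product.
            "availability": "https://schema.org/InStock",
        },
        # Assumption: we summarise reviews rather than listing each one.
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating_value,
            "reviewCount": review_count,
        },
    }


def to_script_tag(data):
    """Serialise the dictionary into a JSON-LD script tag for the page."""
    # Escape "</" so the JSON cannot close the script element early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">{payload}</script>'


if __name__ == "__main__":
    product = build_product_jsonld(
        "Trail Running Shoe", "Lightweight shoe for muddy trails.",
        "https://example.com/images/trail-shoe.jpg", 79.5, "GBP", 4.6, 128,
    )
    print(to_script_tag(product))
```

Generating the markup from your product data, rather than hand-writing it per page, makes it easier to keep prices and review counts in sync with what users actually see on the page.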

If you have an ecommerce website and need help from an experienced SEO ecommerce agency, we can help. Just visit our ecommerce SEO services page for more information.

Server-Side vs. Client-Side Rendering: What Google recommends

In a recent interview with Kenichi Suzuki of Faber Company Inc., Martin Splitt, a Developer Advocate at Google, provided new insights into how Google handles JavaScript rendering, especially in light of AI tools like Gemini.

Splitt explained that both Googlebot and Gemini’s AI crawler use a shared Web Rendering Service (WRS) to process JavaScript content. This makes Google’s systems more efficient at rendering complex sites compared to other AI systems that may struggle with JavaScript.

While some reports have suggested rendering delays can take weeks, Splitt clarified that rendering is typically completed within minutes, with long waits being rare exceptions likely due to measurement issues.

Should you use Server-Side or Client-Side Rendering?

Splitt addressed the common SEO debate between server-side rendering (SSR) and client-side rendering (CSR), noting that there’s no one-size-fits-all answer. The best approach depends on your website’s purpose. For traditional, content-focused sites, relying on JavaScript can be a disadvantage as it introduces technical risks, slows down performance, and drains mobile batteries. In such cases, Splitt recommends SSR or pre-rendering static HTML.

However, CSR remains useful for dynamic, highly interactive applications like design tools or video editing software. Rather than choosing one rendering method, he advises thinking of SSR and CSR as different tools, each appropriate for specific use cases.
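
One rough way to see how much a content-focused page depends on client-side rendering is to check whether its key phrases already appear in the HTML the server returns, before any JavaScript runs. The sketch below is our own illustration, not something Splitt described: the function name `missing_from_raw_html` and the sample markup are hypothetical, and because Google does render JavaScript, a missing phrase is not proof that Google cannot see it.

```python
from html.parser import HTMLParser


class _VisibleText(HTMLParser):
    """Collect text outside <script> and <style> elements."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def missing_from_raw_html(raw_html, key_phrases):
    """Return the phrases that do not appear in the un-rendered HTML."""
    parser = _VisibleText()
    parser.feed(raw_html)
    parser.close()
    # Collapse whitespace and lowercase for a forgiving comparison.
    text = " ".join(" ".join(parser.chunks).split()).lower()
    return [p for p in key_phrases if p.lower() not in text]


if __name__ == "__main__":
    csr_page = ('<html><body><div id="root"></div>'
                '<script>render("Blue running shoes")</script></body></html>')
    ssr_page = "<html><body><h1>Blue running shoes</h1></body></html>"
    print(missing_from_raw_html(csr_page, ["Blue running shoes"]))
    print(missing_from_raw_html(ssr_page, ["Blue running shoes"]))
```

If the main copy of a content-focused page only exists after scripts run, that is a hint the page may be a candidate for SSR or pre-rendering, in line with the recommendation above.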

Structured data is helpful, but not a ranking factor

The discussion also covered the growing importance of structured data in AI-driven search environments. Splitt confirmed that structured data plays a valuable role in helping Google understand website content more confidently. This added context is especially beneficial as AI becomes more embedded in search functions. However, he emphasised that while structured data improves understanding, it does not directly influence a page’s ranking in search results.

The focus should always be on users and producing quality content

Splitt concluded with a reminder that, despite all the technical options available, the fundamentals of SEO haven’t changed. He urged site owners and marketers to prioritise user experience, align their websites with clear business goals, and focus on delivering high-quality, helpful content. As AI continues to reshape the way search engines interpret and deliver information, staying grounded in user needs remains the most effective strategy.

In March’s Google algorithm blog, we introduced the new concept of llms.txt, which aims to help AI crawlers access a simplified version of a website’s content.

Often mistaken for robots.txt, llms.txt aims to highlight only the main content of a page, using markdown so that LLMs can process it more efficiently.

However, the llms.txt file is still just a proposal, not a widely adopted standard, and it has yet to gain serious traction among AI services.

What is Google’s take?

John Mueller from Google weighed in on a Reddit thread where someone asked if anyone had seen signs that AI crawlers were using llms.txt. Mueller responded that, as far as he knows, no major AI service is using or even looking for llms.txt files, comparing its usefulness to the now-obsolete keywords meta tag.

He went on to question the point of using llms.txt when AI agents already crawl the actual site content, which provides a fuller, more trustworthy picture. In his view, asking bots to rely on a separate, simplified file is redundant and untrustworthy, especially since structured data already serves a similar, more credible purpose.

llms.txt sparks concern about cloaking and abuse

A major issue with llms.txt is the potential for abuse. Site owners could show one version of content to LLMs through the markdown file and a completely different version to users or search engines, similar to cloaking, which violates search engine guidelines. This makes it a poor solution for standardisation, especially in an age where AI and search engines rely on more sophisticated content analysis rather than trusting publisher-declared intent.

llms.txt files have little to no impact at the moment

In the same Reddit discussion, someone managing over 20,000 domains noted that no major AI bots are accessing llms.txt. Even after implementation, site owners observed no meaningful impact on traffic or bot activity.
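
If you want to run the same check on your own site, counting requests for /llms.txt in your server logs is a simple starting point. The sketch below assumes logs in the common "combined" access log format; the regular expression, the function name `count_llms_txt_requests`, and the sample log lines are our own illustration rather than anything from the Reddit thread, so adjust them to your server’s actual format.

```python
import re
from collections import Counter

# Assumed combined log format:
# ... "METHOD path HTTP/x" status size "referer" "user-agent"
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def count_llms_txt_requests(log_lines):
    """Count requests for /llms.txt, grouped by user agent string."""
    hits = Counter()
    for line in log_lines:
        match = LOG_LINE.search(line)
        if not match:
            continue
        # Ignore any query string when comparing the path.
        path = match.group("path").split("?", 1)[0]
        if path == "/llms.txt":
            hits[match.group("agent")] += 1
    return hits


if __name__ == "__main__":
    sample = [
        '203.0.113.5 - - [25/Apr/2025:10:00:00 +0000] '
        '"GET /llms.txt HTTP/1.1" 200 512 "-" "ExampleBot/1.0"',
        '203.0.113.6 - - [25/Apr/2025:10:00:01 +0000] '
        '"GET /robots.txt HTTP/1.1" 200 120 "-" "ExampleBot/1.0"',
    ]
    for agent, count in count_llms_txt_requests(sample).items():
        print(agent, count)
```

An empty result over a reasonable time window would be consistent with the observation above that major AI bots are not requesting the file.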

This sentiment was echoed in a follow-up LinkedIn post by Simone De Palma, who originally started the discussion. He argued that llms.txt may even hurt the user experience, since content citations might lead users to a plain markdown file instead of a rich, navigable webpage. Others agreed, pointing out that there are better ways to spend SEO and development time, such as improving structured data, robots.txt rules, and sitemaps.

Should you utilise llms.txt files?

According to both Google and the SEO community, llms.txt is currently ineffective, unsupported, and potentially problematic. It does not control bots like robots.txt, nor does it provide any measurable SEO benefit. Instead, it has been recommended that site owners focus on established tools like structured data, proper crawling directives, and high-quality content.