Here at Impression, we strive to make the websites we work on as easy as possible for search engine bots to crawl, so that they discover our clients’ most valuable content: the content which we intend to be indexed.

As part of our technical SEO auditing process, we review elements of a given website which may be giving search engine crawlers a hard time, and therefore affecting overall crawl efficiency and the crawl budget allocated to the site.

Check out our technical SEO agency page to discover more about our work.

What is crawl budget?

Google defines crawl budget as “the number of URLs Googlebot can and wants to crawl”. However, there are also a couple of other factors to consider before crawl budget is truly defined.

The first of these factors is the “crawl rate limit”, which ensures that Google crawls the pages at a rate which won’t be detrimental to the stability of a website’s server. The second is “crawl demand”, which is how much Google wants to crawl your web pages. Google evaluates this based on “how popular pages are and how stable the content is in the Google index”.

Particularly with larger websites, our aim is to provide search engine crawlers with the best experience possible, eliminate crawler traps and essentially create a more efficient crawling process.

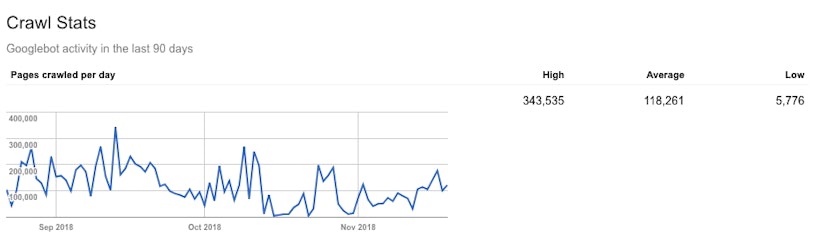

To estimate a site’s crawl budget, the “crawl stats” report in Google Search Console provides a good starting point. This report shows Googlebot crawl activity over a 90-day period, including the average number of pages crawled per day along with the high and low crawl numbers.

In the above example, by taking the daily average of 118,261 and multiplying this by 30 days, we can see that the average monthly crawl budget for this website is around 3,547,830 URLs. If you then compare this to the number of submitted URLs, you’ll get an idea of how sufficient the budget is.
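The arithmetic above can be sketched in a few lines of Python. This is a minimal illustration rather than a definitive method: the function names are our own, and the number of submitted URLs is a hypothetical figure, since it isn't stated for this website.

```python
def estimate_monthly_crawl_budget(daily_average: int, days: int = 30) -> int:
    """Scale the daily average of crawled pages up to a monthly estimate."""
    return daily_average * days


def budget_to_submitted_ratio(monthly_budget: int, submitted_urls: int) -> float:
    """How many times over the submitted URLs could be crawled in a month."""
    if submitted_urls <= 0:
        raise ValueError("submitted_urls must be positive")
    return monthly_budget / submitted_urls


# Daily average taken from the crawl stats example above.
monthly = estimate_monthly_crawl_budget(118_261)
print(f"{monthly:,}")  # 3,547,830

# Hypothetical figure: replace with the number of URLs in your own sitemaps.
submitted = 1_000_000
print(round(budget_to_submitted_ratio(monthly, submitted), 2))
```

A ratio comfortably above 1 suggests the budget could cover every submitted URL in a month, although this alone says nothing about where the budget is actually being spent.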

Assessing crawl efficiency

The above approach, however, isn’t a conclusive measure of how efficiently crawl budget is being used – this is because this methodology assumes that every page you have submitted will be crawled each month, and also that the budget is only being consumed on the pages which you have submitted to Google.

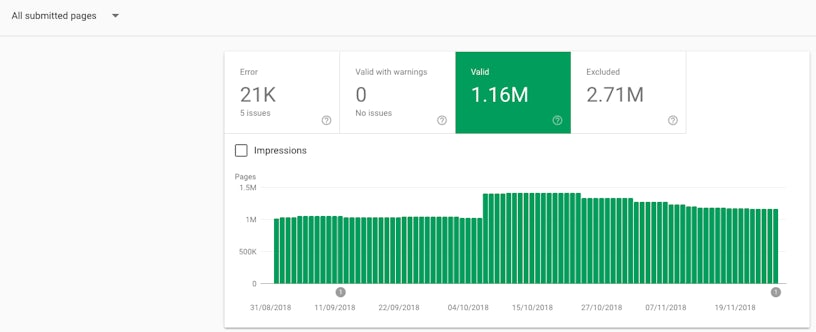

To begin assessing the crawl efficiency of a particular domain, head over to the coverage reports within Google Search Console. Here we can get a better picture of how Google is crawling and indexing the pages we have submitted (via XML sitemaps).

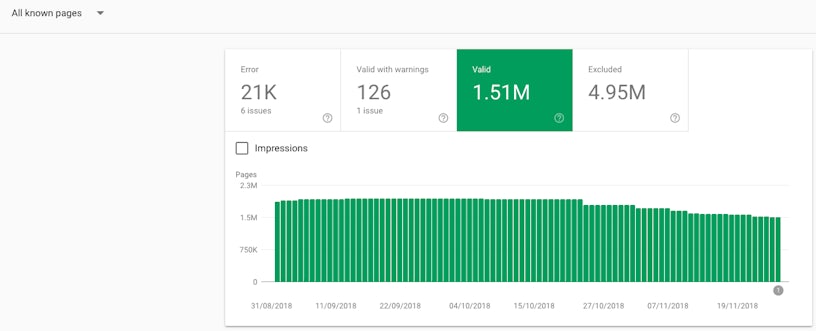

The same reports also show the pages which Google “knows” about.

By reviewing the numerical difference between the known and submitted URLs, we can get a granular view of where Google is crawling URLs which are of low value, duplicates or potential crawler traps. In the above examples, we can see that the number of valid pages in the “known” report is higher than the number of valid pages in the “submitted” report, suggesting that Google has crawled and indexed pages which we don’t intend to be indexed.
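If both lists of URLs are exported, the comparison can be automated. The sketch below assumes you already have the two lists as plain Python lists of URL strings; the function names and example URLs are hypothetical and purely for illustration.

```python
from collections import Counter
from urllib.parse import parse_qsl, urlsplit


def unsubmitted_urls(known, submitted):
    """Return URLs that Google knows about but which were never submitted."""
    return sorted(set(known) - set(submitted))


def parameter_counts(urls):
    """Count how often each query parameter name appears across the URLs."""
    counts = Counter()
    for url in urls:
        for name, _ in parse_qsl(urlsplit(url).query, keep_blank_values=True):
            counts[name] += 1
    return counts


# Hypothetical example data.
known = [
    "https://example.com/shoes",
    "https://example.com/shoes?sort=price",
    "https://example.com/shoes?sort=price&colour=red",
    "https://example.com/boots",
]
submitted = ["https://example.com/shoes", "https://example.com/boots"]

extra = unsubmitted_urls(known, submitted)
print(len(extra))               # 2
print(parameter_counts(extra))  # Counter({'sort': 2, 'colour': 1})
```

Parameters which appear most often among the unsubmitted URLs are usually a sensible place to begin the audit.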

Reviewing the individual reports and checking over errors, warnings and reasons for excluded pages is a good place to start when auditing the URLs which Google has encountered when crawling a website.

This will allow you to isolate issues, identify common patterns and take appropriate action to improve crawl efficiency and eliminate errors which could be detrimental to your overall SEO efforts, including duplicate and thin content.

What are crawler traps?

“Crawler traps” are areas within a website that contain structural issues and therefore have the potential to confuse crawlers. Some examples include:

- Infinite URLs due to coding errors

- Session IDs

- Faceted navigations

- Search parameters
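As a rough illustration, URLs from a crawl or log file export can be sorted into these categories with a simple heuristic. The parameter names below are hypothetical and would need to be replaced with the ones your website actually uses; the repeated path segment check is only one possible signal of an infinite URL.

```python
from typing import Optional
from urllib.parse import parse_qsl, urlsplit

# Hypothetical parameter names, for illustration only.
SESSION_PARAMS = {"sessionid", "sid", "phpsessid"}
SEARCH_PARAMS = {"q", "s", "search"}
FACET_PARAMS = {"colour", "size", "sort", "brand"}


def classify_trap(url: str) -> Optional[str]:
    """Return a likely crawler trap category for the URL, or None."""
    parts = urlsplit(url)
    names = {
        name.lower()
        for name, _ in parse_qsl(parts.query, keep_blank_values=True)
    }
    if names & SESSION_PARAMS:
        return "session_id"
    if names & SEARCH_PARAMS:
        return "search"
    if names & FACET_PARAMS:
        return "faceted_navigation"
    # A path segment which repeats (e.g. /blog/blog/blog/) is often caused
    # by a relative link bug that generates endless new URLs.
    segments = [segment for segment in parts.path.split("/") if segment]
    if len(segments) != len(set(segments)):
        return "possible_infinite_url"
    return None


print(classify_trap("https://example.com/shoes?colour=red"))  # faceted_navigation
print(classify_trap("https://example.com/blog/blog/blog/post"))  # possible_infinite_url
```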

In the case of larger websites (where we have a massive number of pages that we wish to be indexed), we want to avoid or minimise these traps in order to give the crawler the best chance of reaching valuable pages which are deep inside the site architecture.

Overall objectives here are to:

- Increase the total average number of pages crawled per day (and as a result each 90-day period).

- Identify potential crawler traps within the website.

- Ensure that pages adding little or no value via Google Search are de-prioritised in favour of high-value pages.

- Bring the number of “known” pages closer in line with the number of “submitted” pages.

How to identify crawler traps and inefficiencies

The best place to start when attempting to improve crawl efficiency is by asking one simple question: “should this page be indexed in Google’s search results?” To further qualify any URLs, you will need to look at the value they might add or, more specifically, the purpose they serve.

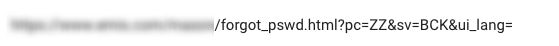

For example, is this page primarily for existing users who need to reset a password in order to access their account?

Or does the URL affect the way in which a user searches on the site, for example by filtering or sorting a particular page?

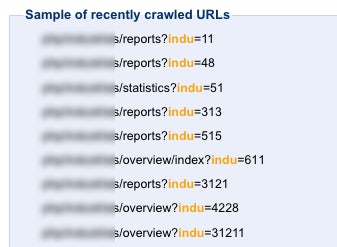

In addition, heading over to the parameter handling tool within Search Console will provide further insight into the URLs which Google is “monitoring” and has therefore recently crawled.

We find it useful to export all of the monitored parameters into a spreadsheet and total up the number of monitored URLs. From here, you can gain further perspective on how crawl budget is potentially being wasted – in the case of this particular website there was a total of 1,188,702 monitored URLs which existed due to parameters.
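The same total can be produced with a short script instead of a spreadsheet. This sketch assumes a CSV export with two columns named `parameter` and `urls_monitored`; both column names and the sample figures are hypothetical, so adjust them to match your own export.

```python
import csv
import io

# Hypothetical export; real column names and figures will differ.
EXPORT = '''parameter,urls_monitored
sort,"1,200"
colour,800
sessionid,450
'''


def total_monitored_urls(csv_text, count_column="urls_monitored"):
    """Return the overall total and the per-parameter counts, largest first."""
    reader = csv.DictReader(io.StringIO(csv_text))
    rows = [
        # Strip thousands separators before converting to an integer.
        (row["parameter"], int(row[count_column].replace(",", "")))
        for row in reader
    ]
    rows.sort(key=lambda item: item[1], reverse=True)
    return sum(count for _, count in rows), rows


total, per_parameter = total_monitored_urls(EXPORT)
print(total)             # 2450
print(per_parameter[0])  # ('sort', 1200)
```

Sorting by count puts the parameters which generate the most URLs at the top, which helps when deciding what to raise with the development team first.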

When troubleshooting these issues, it’s often also a good idea to collaborate with web development teams as they will have a good understanding of how the site has been built and therefore how it functions. They will be able to advise on the purpose of the parameters and inform you of how they affect page content.

We’ve also encountered websites where attempts have been made to optimise crawl budget by adding specific directives to the robots.txt file. Although this is a common and often effective method, if it is approached without a clear strategy or a working knowledge of how the site functions, then URLs can still be discovered (and indexed) by search engine crawlers.
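One way to spot gaps in an existing set of directives is to test sample URLs against them. The sketch below uses Python’s standard `urllib.robotparser` module with a hypothetical robots.txt and hypothetical URLs. Note that this parser matches plain path prefixes and does not interpret the `*` wildcard patterns which Google supports, so treat it as a rough check only.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt which blocks internal search but nothing else.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Hypothetical low-value URLs we would like crawlers to avoid.
urls = [
    "https://example.com/search?q=shoes",
    "https://example.com/shoes?sort=price",
]

for url in urls:
    allowed = parser.can_fetch("Googlebot", url)
    print(url, "crawlable" if allowed else "blocked")
```

Here the search URL is blocked, but the sorted category URL is still crawlable because no directive covers it. A blocked URL can also still end up indexed if other pages link to it, since robots.txt controls crawling rather than indexing.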

7 steps to optimise crawl efficiency

If Google has already indexed pages on the site which are blocked via robots.txt, then the first course of action is to remove the directives from the robots.txt file and allow these pages to be crawled. Although this sounds contradictory and counterproductive when considering crawl budget, it will allow the necessary steps to be taken in order to improve crawl efficiency long term.

We recommend the following steps as a general methodology when dealing with low-value content and optimising for crawl budget:

1. Remove existing robots.txt directives (if they exist) to allow parameter pages to be crawled.

2. Review parameter URL patterns and ensure a “noindex” robots meta tag or an X-Robots-Tag HTTP header with a “noindex” directive is added to any pages which shouldn’t be in the index.

3. Specify a canonical URL across any relevant parameter pages (for example, when dealing with faceted navigation on product category pages).

4. Give search engines some time to re-crawl these pages and pick up on the requests, and monitor for undesirable pages falling out of the index.

5. Use the URL parameter handling settings in Google Search Console to instruct Google not to crawl any parameter-based URLs which don’t add value.

6. Ensure a “nofollow” attribute is placed on any internal links which lead to filter or parameter-based pages, so that search engines can’t discover and crawl these pages by following internal links.

7. As a final measure, and once satisfied that correct handling is in place, re-introduce robots.txt blocking via a comprehensive and considered list of URL patterns.
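To illustrate the “noindex” and canonical steps above, the sketch below shows one way server-side code might decide which response header and canonical URL to use for a parameter URL. It is a simplified example under stated assumptions: the parameter names and function names are hypothetical, and which parameters belong in each group depends entirely on how your website functions.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical groupings; agree the real ones with your development team.
NOINDEX_PARAMS = {"sessionid", "q"}   # pages which shouldn't be indexed
FACET_PARAMS = {"colour", "size"}     # facets to fold into a canonical URL


def _params(url):
    """Return the query string as a list of (name, value) pairs."""
    return parse_qsl(urlsplit(url).query, keep_blank_values=True)


def robots_headers(url):
    """Return an X-Robots-Tag noindex header for URLs which need one."""
    names = {name.lower() for name, _ in _params(url)}
    if names & NOINDEX_PARAMS:
        return {"X-Robots-Tag": "noindex"}
    return {}


def canonical_url(url):
    """Strip facet parameters to build the canonical URL for the page."""
    parts = urlsplit(url)
    kept = [
        (name, value)
        for name, value in _params(url)
        if name.lower() not in FACET_PARAMS
    ]
    return urlunsplit(
        (parts.scheme, parts.netloc, parts.path, urlencode(kept), "")
    )


print(robots_headers("https://example.com/search?q=boots"))
# {'X-Robots-Tag': 'noindex'}
print(canonical_url("https://example.com/shoes?colour=red&page=2"))
# https://example.com/shoes?page=2
```

The canonical URL returned here would then be output in the page’s `<link rel="canonical">` element.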

In conclusion

The subject of crawl budget and optimising for crawl efficiency is one which has been widely documented within the search industry. The examples and methodology detailed here are specific to a particular set of challenges in relation to one domain. Each website (and particularly larger websites) will have its own set of challenges, for which unique or bespoke optimisation techniques may need to be applied. That said, the guidance detailed here outlines some of the steps which can be taken to a) assess whether crawl budget is an issue which requires attention and b) make a website more accessible and efficient for search engine crawlers.