- What does ‘incrementality’ mean in advertising?

- What is incrementality testing?

- Incrementality testing example

- How contribution-based measurement compares to attribution-based measurement

- Triangulation in marketing

- When should you consider incrementality testing?

- Which digital advertising channels are well suited to incrementality testing?

- What if the insights from an incrementality test don’t align with insights from multi-touch attribution?

- How to calculate incrementality and lift

- Aims of incrementality testing

- Incrementality test designs

- Geo experiments

- User testing

- Product incrementality testing

- Synthetic controls

- Incrementality testing frameworks

- Deriving the control group(s)

- A/B testing

- Weighted averages

- Time series methods

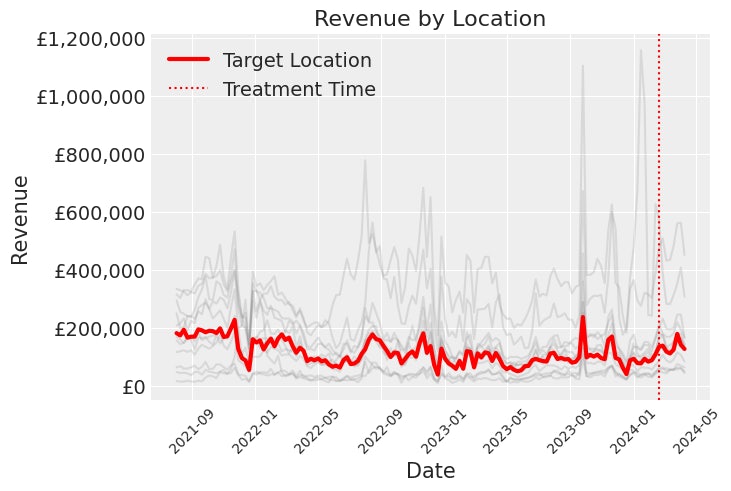

- Example of an incrementality test

- Background

- Methodology

- Pre-Treatment

- During treatment

- Post-treatment

- Results and interpretation

- Common considerations of geo-testing

- How to interpret the results of your incrementality test

- What if your channels are not incremental?

- Next steps if your channels are incremental?

- How long should an incrementality test last?

- How to overcome challenges with incrementality testing

- Hold out testing for the synthetic control

- How does incrementality testing complement Marketing Mix Modelling?

What does ‘incrementality’ mean in advertising?

Incrementality refers to the causal improvement in revenue, or the KPI of interest, from an isolated activity.

Incrementality testing aims to measure the additional sales generated exclusively as a result of the advertising efforts. Incrementality testing provides a more precise understanding of the direct impact of advertising efforts on driving consumer behaviour, allowing you to discover the true effectiveness of campaigns in driving desired outcomes.

In short, the outcomes from Incrementality testing can go a long way to helping you answer “where should I put my next pound/dollar of marketing budget to get the best returns”.

Incrementality is not the same as measurement methods which rely on digital, multi-touch attribution. Digital attribution typically claims credit for sales following any ‘touch-point’ in the customer’s journey, not just the sales which were truly motivated by the advertising exposure. Digital attribution does not measure incrementality, as digital attribution will also cover sales which would have happened anyway.

What is incrementality testing?

Incrementality testing refers to a method used to evaluate the true impact of specific marketing initiatives or channels on desired outcomes, such as sales or conversions, through controlled experimentation.

Unlike digital, multi-touch attribution, which assigns credit to multiple touchpoints along the customer journey, incrementality testing isolates the causal effect of a marketing intervention by comparing the behaviour of a treatment group against a control group.

- Treatment Group: Exposed to advertising, used to measure the causal impact of advertising.

- Control Group: Not exposed to advertising, serves as a baseline for comparison.

By measuring the differences in desired outcomes between the treatment and control groups, incrementality testing provides you with valuable insights into the true effectiveness of marketing strategies and helps optimise budget allocation for maximum ROI.

Incrementality testing can do this by starting, stopping, increasing or decreasing investment into a particular marketing activity for a measurable treatment group. For incremental marketing activities, even when the experiment is controlled for externalities, the total sales values in the treatment group should be affected by the change in marketing investments there.

If a change isn’t detectable, then the marketing investment, as executed in the test, is offering no measurable incremental value. However, this doesn’t necessarily mean the channel or investment type is entirely ineffective – just the specific execution measured in the test.

Incrementality testing example

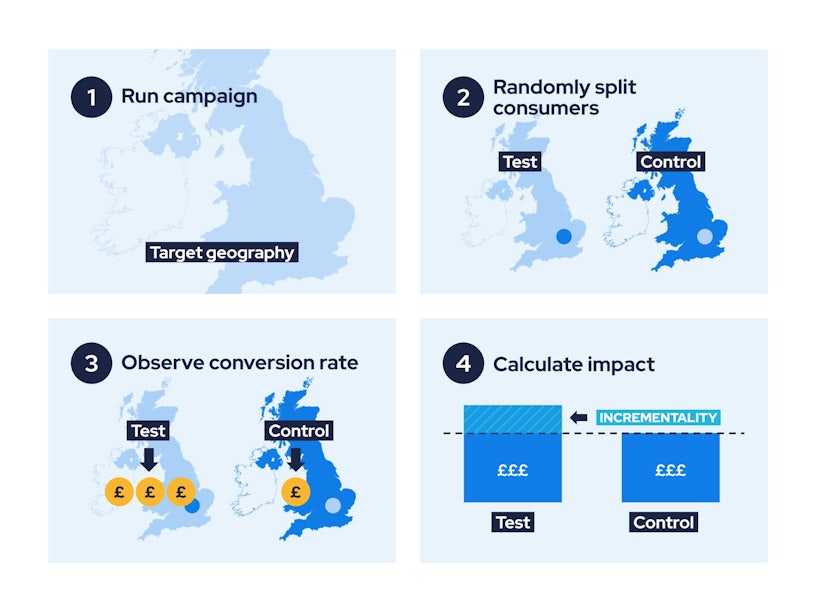

- Step 1: Select a market or region in which you’d like to examine the effectiveness of advertising. Call this the treatment group.

- Step 2: Select a market or region, or derive an artificial market or region, which serves as a sales baseline for comparison. Call this the control group.

- Step 3: Run ads in the treatment group only, ensuring that everything in the control group remains as constant as possible.

- Step 4: Once the test has finished, analyse the results and calculate the incremental uplift and its associated ROI.

How contribution-based measurement compares to attribution-based measurement

Contribution-based measurement refers to the impact made by advertising on the top line of the business’ results, that is, on its revenue. Attribution-based measurement could measure plenty of sales, but unless these sales are net new to the business, they would make no additional contribution.

Understanding these differences in measurement is critical, as these can sometimes be confused. Incrementality testing (as well as marketing mix modelling for that matter) seeks to measure incremental improvements in contribution from marketing, for the business.

A contribution-based approach to measurement uses historical data, utilises statistical techniques and doesn’t require knowledge of the customer journey. An attribution-based approach tracks touchpoints in the customer journey from the point of viewing an ad and measures every sale associated with ad exposure.

To truly understand the impact of your marketing you need to understand the differences in the measurement techniques available to you, and the pros and cons of each method.

An attribution-based approach to measurement has two main flaws:

- Generosity: The first flaw is that it tends to measure marketing performance through a slightly generous lens. As this method measures all sales associated with ad exposure, it will also record those sales which would’ve happened anyway regardless of the user interaction with the ad. Additionally, if the user were to view the ad twice, using two different devices, an attribution-based approach would count this as two different user interactions, when in fact it was the same user. As a result of this, you might find that an attribution-based approach to measurement reports generously.

- Accuracy: The second flaw is that, with the deprecation of the third-party cookie, tracking touch points within the customer journey is becoming more difficult and less accurate.

Although an attribution-based approach to measurement has its flaws, it does provide user and campaign-level insights. User and campaign-level insights offer a granular understanding of your audience’s behaviour and the performance of individual marketing campaigns. By analysing user-level data, you can gain insights into customer preferences, behaviours, and purchase patterns, allowing for more personalised and targeted marketing efforts. Campaign-level insights also provide visibility into the effectiveness of specific marketing initiatives, helping you to identify which campaigns are driving the most engagement, conversions, and ROI.

Incrementality testing on the other hand adopts a contribution-based approach to measurement and avoids these flaws. The control group of the incrementality test is designed to act as a proxy for what would’ve happened to the treatment group in absence of the marketing effect.

As an incrementality test measures the difference between the treatment and control group, the sales which would’ve happened anyway are accounted for by the control group. Furthermore, incrementality testing doesn’t require knowledge of the customer journey because it measures the total revenue impact on the business rather than what web analytics software records, and hence is not affected by the deprecation of the third-party cookie.

Although incrementality testing might seem like the solution to attribution-based measurement, an incrementality test is only as good as the control group used to mimic the treatment group in absence of the treatment.

Triangulation in marketing

Although both contribution-based and attribution-based approaches aim to measure the performance of your marketing, they do so in different ways. This makes comparison of the two methods impractical. Instead of comparing these methods, you may gain more insights into your marketing performance, or at least be able to remain agile in your media buying, by combining them.

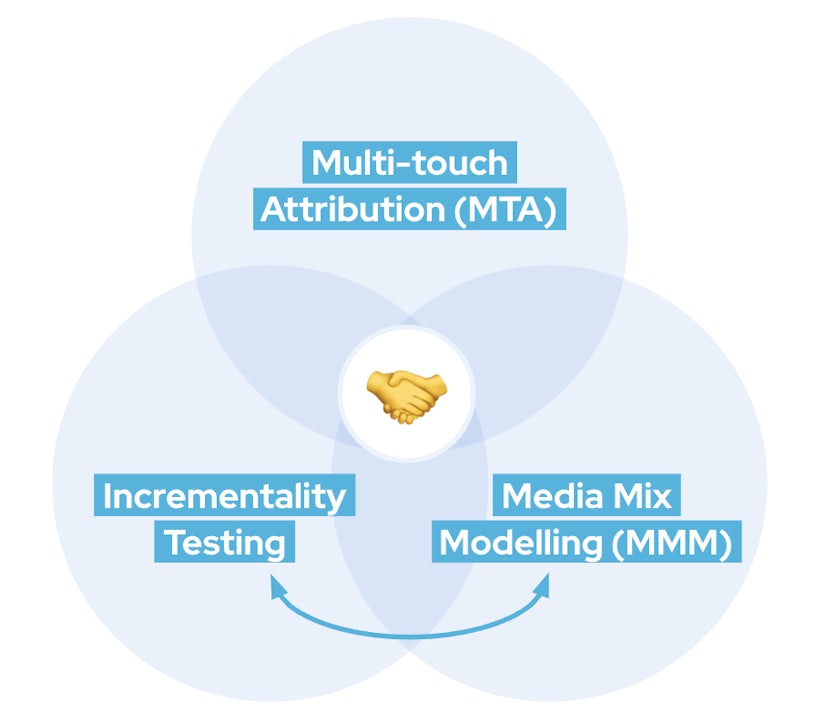

Triangulation in marketing simply refers to the process of gaining insights into marketing performance from the three most popular techniques used in media effectiveness measurement. The aim of triangulation is to extract insights from all sources and combine them to estimate the “true” performance of your marketing. The three most popular sources in marketing measurement are multi-touch attribution (MTA), marketing mix modelling (MMM) and incrementality testing. Each is important, as they offer differing levels of insight which are useful at different time intervals.

Multi-touch attribution (MTA) focuses on allocating credit to various touch points along the customer journey to understand how each touch point contributes to sales or conversions, and often reports on this in near-real-time. MMM, on the other hand, uses statistical modelling to analyse historical data and quantify the impact of different marketing channels on business outcomes. MMM is often run periodically, on refresh intervals which can range between weeks and months. Incrementality testing involves conducting experiments where a subset of the target audience is exposed to a marketing intervention, while another subset serves as a control group. Incrementality testing, and testing in general, should really be an ongoing programme of work in a business, although each campaign or platform might only be tested periodically.

Triangulation is really a new industry term, which acknowledges these three different levels of insight. From MTA you’ll be able to extract campaign and user level insights, from MMM you’ll be able to extract channel and source level insights and from incrementality testing you’ll be able to extract causal impact estimates and activity level insights. Triangulation aims to cancel out any limitations of all three methods by taking advantage of their individual strengths, allowing you to extract the best insights from all three methods.

In practice, with measurement triangulation, approximations will need to be manually elicited from the insights. However, one of the benefits of this approach for teams is that it can support a gradual movement towards contribution-based measurement reporting, without wholesale moves away from attribution-modelled reporting.

When should you consider incrementality testing?

If you’re considering a new marketing channel, or changing your marketing mix, then incrementality testing is crucial to understand the impacts. You can use incrementality testing to determine the causal relationship between marketing activities and desired outcomes, such as sales, conversions, or brand awareness.

This testing methodology is essential when you need reliable and unbiased insights to optimise marketing budgets, allocate resources effectively, and maximise the return on investment from your marketing spend.

Alongside incrementality testing, businesses with complex, multi-channel marketing strategies are well suited to Marketing Mix Modelling (MMM). MMM is also more effective for businesses with longer sales cycles, where there are multiple touchpoints across various channels influencing customer behaviour.

On the other hand, MTA can be more suitable for earlier-stage businesses, those with shorter sales cycles, or particularly those heavily reliant on digital channels and online advertising platforms for new customer acquisition and growth. These businesses can benefit from the granular insights provided by MTA, which allow them to optimise digital advertising campaigns in real time and measure the immediate impact of their marketing efforts.

Which digital advertising channels are well suited to incrementality testing?

While all digital advertising channels can benefit from incrementality testing, some are particularly well-suited due to their inherent characteristics, and others are popular targets due to the potential to see conflicting digitally attributed performance reported against them.

For most marketers, coming at this with experience with digital attribution, we see broadly two challenges:

- Short-term – Advertising designed to capture customers at the point of need, advertise a sale promotion, or generally drive short-term results is over-attributed in MTA reporting. Channels such as Microsoft or Google paid search and paid shopping ads (PLAs) are good examples here.

Tests on this type of activity are useful to demonstrate the true incrementality to the business’ top line revenue numbers, rather than self-serving platform reporting metrics. Testing for the impact of paid search brand bidding is a good example here, among others.

- Long-term – Advertising designed to build mental availability and brand awareness for customers ahead of their point of need, often called “above the line”, is typically under-attributed in digital attribution. Channels such as TV, Radio, out-of-home, and some digital social (Facebook, Instagram, TikTok) and video (YouTube) advertising are good examples here.

Tests on this type of activity are useful to demonstrate that despite the under-reporting in digital attribution, these channels can contribute over the short and medium term.

What if the insights from an incrementality test don’t align with insights from multi-touch attribution?

Differences in the measurement reporting from multi-touch attribution, media mix modelling and incrementality testing are to be expected. Incrementality testing and digital attribution measure similar things but in different ways. MTA will measure all sales associated with ad exposure, whereas incrementality testing aims to measure only the additional causal sales as a result of advertising. You may often find, but not always, that incrementality testing reports lower ROI estimates than MTA. This is because MTA will generally attribute more sales to an ad than testing will. The Return On Ad Spend (ROAS) reported as a result of incrementality testing is often referred to as the incremental Return On Ad Spend (iROAS).
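As a rough illustration of why the two figures can diverge, the Python sketch below compares an attributed ROAS with an iROAS. All figures and function names are hypothetical and chosen for illustration only, and iROAS is assumed here to be incremental revenue (treatment revenue minus the estimated baseline) divided by ad spend.

```python
def roas(attributed_revenue: float, ad_spend: float) -> float:
    """Return on ad spend using all revenue that attribution credits to the ads."""
    return attributed_revenue / ad_spend


def iroas(treatment_revenue: float, baseline_revenue: float, ad_spend: float) -> float:
    """Incremental ROAS: only revenue above the estimated baseline is counted."""
    return (treatment_revenue - baseline_revenue) / ad_spend


# Hypothetical figures, not taken from any real campaign.
ad_spend = 10_000.0
attributed_revenue = 50_000.0   # revenue MTA associates with ad exposure
treatment_revenue = 120_000.0   # total revenue observed in the treatment group
baseline_revenue = 100_000.0    # estimated revenue without the advertising

print(roas(attributed_revenue, ad_spend))                    # 5.0
print(iroas(treatment_revenue, baseline_revenue, ad_spend))  # 2.0
```

In this made-up case attribution credits the ads with more revenue than the test shows to be incremental, so the attributed ROAS is the higher of the two figures.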

How to calculate incrementality and lift

| Key |
|---|
| Σ = total sum |
| \|·\| = absolute value |

In the context of testing, lift is equivalent to the positive difference between treatment sales and baseline sales. Baseline sales are simply an approximation to what sales would’ve been in absence of the treatment or marketing intervention. If the objective of your test is to observe an increase in sales, this may often be referred to as “lift” or “uplift”.

Lift = |Treatment sales – Baseline sales|

Incrementality refers to the incremental lift that advertising spend provides to overall sales or conversions. Incrementality provides the percentage of sales or conversions received as a direct result of an advertising campaign. Incremental lift analysis looks specifically at whether an individual advertising campaign was effective and how effective, by determining what sales revenue would have been without the ad campaign.

Incrementality = Causal Impact / Σ Sales = Σ Lift / Σ Sales
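The two formulas can be worked through with a small Python sketch. The sales figures below are invented for illustration, and "Σ Sales" is assumed to mean total observed sales in the treatment group over the test period.

```python
def lift_per_period(treatment_sales, baseline_sales):
    """Lift in each period: |treatment sales - baseline sales|."""
    return [abs(t - b) for t, b in zip(treatment_sales, baseline_sales)]


def incrementality(treatment_sales, baseline_sales):
    """Share of total treatment sales explained by lift (sum of lift / sum of sales)."""
    total_lift = sum(lift_per_period(treatment_sales, baseline_sales))
    return total_lift / sum(treatment_sales)


# Hypothetical weekly sales during the test (treatment) and the estimated baseline.
treatment_sales = [120.0, 130.0, 125.0, 125.0]
baseline_sales = [100.0, 105.0, 100.0, 95.0]

print(lift_per_period(treatment_sales, baseline_sales))  # [20.0, 25.0, 25.0, 30.0]
print(incrementality(treatment_sales, baseline_sales))   # 0.2
```

Here total lift is 100 against total treatment sales of 500, so 20% of sales in the test period would be considered incremental.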

Overall, lift, causal impact and incrementality are similar concepts with different meanings. The terms “lift test” and “incrementality test” are often used interchangeably because both tests offer the same thing: each provides both lift and incrementality estimates. The three key questions you should ask before conducting a lift test yourself, or hiring a vendor to conduct one, are as follows:

- Can you estimate the total lift?

- Can you estimate the incrementality?

- How do you estimate base sales?

The estimation of base sales is the key characteristic of an incrementality test. A poor approximation to base sales will yield unrealistic results. As both lift and incrementality are dependent upon this approximation, it’s essential to get it right!

Aims of incrementality testing

In short, incrementality testing aims to estimate the true performance of your marketing efforts, which in turn allows you to increase revenue through the best long term allocation of budgets and resources. These aims become particularly evident when testing the performance of newer advertising channels, or if as a brand you are moving from a performance focus to a balanced brand and performance split.

Without a solid testing methodology, marketers might roll out a new channel across all advertising regions in hopes that the channel will perform well. Usually the budget required to do this is relatively large, and if a solid measurement plan isn’t in place, or multi-touch attribution is used to measure success and fails to capture it, then the conclusion may be that the spend was ineffective. There is a real risk of this opinion being formed when activating new or brand-based advertising channels or campaigns, as returns may not be immediately obvious.

By conducting an incrementality test, in which only one advertising region is exposed to the new channel, you can gain insights into the performance of this channel before rolling out the channel across all geographies.

If the new channel, creative or campaign doesn’t perform well in one region, it’d be sensible to assume it won’t across more regions and hence it’d be time to re-evaluate the advertising strategy for this channel. Alternatively, if the new channel does perform well, you’d be rolling out this channel with much more confidence than you’d have if you didn’t conduct a test.

Alternatively, you may have a hypothesis that your Google Ads paid search budget for pure brand terms is too high and that your net sales won’t be affected by reducing budget. As opposed to just reducing budget across all advertising regions and hoping sales don’t take a drastic hit, you could conduct an incrementality test to prove or disprove this hypothesis. By conducting an incrementality test, in which only one advertising region is exposed to a reduced Google Ads paid search budget, you can gain insights into how this affects sales in one region before rolling out the reduction across all regions.

If reducing budget doesn’t significantly affect sales, then you’d have evidence to suggest this won’t significantly affect sales in other regions. Alternatively, if sales are significantly affected in one region, then you’d have evidence to suggest reducing budget in all regions will significantly affect sales. If this is the case, the amount of sales you’d have lost by reducing budget in all advertising regions would significantly outweigh the sales lost by reducing budget in one region first.

In essence, incrementality testing aims to reduce the reliance on guesswork when implementing changes to your marketing strategies. Additionally, if your business advertises using multiple channels, you may consider Marketing mix modelling. If you do, incrementality testing helps to inform MMM and in turn aims to reduce any uncertainty associated with the outputs of an MMM.

Incrementality test designs

The test design simply refers to how you choose to separate your treatment and control groups. There are many different ways to implement incrementality testing, from the test design to the statistical techniques used, and there is no universally accepted way of conducting an incrementality test. Each type of test has its own strengths and limitations. In this section, we’ll discuss several types of test design and consider their strengths and weaknesses.

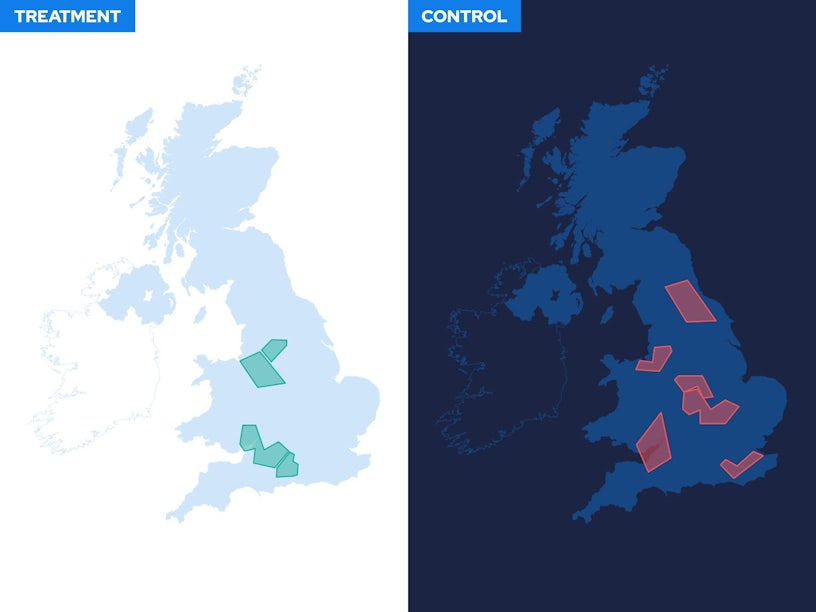

Geo experiments

One of the most frequently used forms of test design is geo-experiments. A geo-experiment, within the realm of incrementality testing, involves conducting controlled experiments within specific geographical regions to measure the incremental impact of marketing initiatives. In a geo-experiment, different geographic areas are selected as treatment and control groups, where the treatment group is exposed to the marketing intervention while the control groups remain unaffected. By comparing the outcomes between these groups, you can estimate the true causal effect of your marketing efforts within each geographic region.

One of the key strengths of geo-experiments is their ability to provide localised insights, allowing you to understand regional variations in consumer behaviour and tailor your marketing strategies accordingly. Geo-experiments are relatively cost-effective and less prone to external confounding factors compared to broader market-wide experiments.

However, a major weakness of geo-experiments is the potential for spill-over effects, where marketing activities targeted at one region inadvertently impact neighbouring regions, leading to biased results. Additionally, geo-experiments may not always capture the full extent of complex consumer behaviours, particularly in markets with significant cross-regional interactions or where digital footprints transcend geographical boundaries.

User testing

Another form of test design is by user. User-based incrementality tests involve dividing users into randomised treatment and control groups to measure the incremental impact of marketing efforts on individual users. By comparing the outcomes between these groups, you can estimate the causal effect of your marketing activities on user behaviour.
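One common way to split users into randomised groups is to hash each user identifier, so that assignment is effectively random but repeatable. The Python sketch below is an illustrative assumption rather than a method described in this article; the function name, the `salt` value and the 50/50 split are all hypothetical.

```python
import hashlib


def assign_group(user_id: str, salt: str = "example-test", treatment_share: float = 0.5) -> str:
    """Deterministically assign a user to 'treatment' or 'control'.

    The salted user ID is hashed and mapped to a number in [0, 1); users below
    `treatment_share` go to treatment. Changing the salt reshuffles the groups.
    """
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000  # first 32 bits scaled to [0, 1)
    return "treatment" if bucket < treatment_share else "control"


# Hypothetical user IDs; the same ID always lands in the same group.
groups = [assign_group(f"user-{i}") for i in range(10_000)]
print(groups.count("treatment"), groups.count("control"))
```

Because the assignment depends only on the identifier and the salt, a returning user sees a consistent experience without the groups needing to be stored.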

One of the key strengths of user-based incrementality tests is their ability to provide insights into the impact of marketing on individual users, allowing for personalised targeting and messaging strategies. Additionally, user-based tests are less susceptible to external factors that may influence regional or market-wide experiments, providing more accurate and reliable results.

However, a major drawback of user-based tests is the potential for selection bias, where certain types of users may be more likely to respond to marketing interventions, leading to skewed results. Moreover, user-based tests may require larger sample sizes to detect statistically significant differences in user behaviour, especially for rare outcomes or niche market segments.

Ad platforms with large identity graphs can offer such tests, as can organisations which have large audience data pools. However, geography-based experiments are more often chosen for testing media channels in the context of new customer acquisition, as third-party measurement vendors and platforms often cannot access these user attributes.

Product incrementality testing

The last form of test design we’re going to talk about is product-based. Product-based incrementality tests involve dividing products into treatment and control groups to assess the incremental impact of marketing efforts on product sales. By comparing sales outcomes between these product groups, you can estimate the causal effect of marketing activities on product performance. One of the main strengths of product-based incrementality tests is their ability to evaluate the impact of marketing campaigns on specific products or product categories, enabling you to tailor your marketing strategies accordingly.

Additionally, product-based tests provide insights into the differential effects of marketing across different product lines, helping you allocate resources effectively. However, a potential flaw of product-based tests is the need for sufficient product volume and diversity to generate meaningful results. In cases where certain products have low sales volume or limited variability, detecting significant differences between treatment and control groups may be challenging. Moreover, product-based tests may not account for cross-product effects or interactions, which could affect the interpretation of results.

Synthetic controls

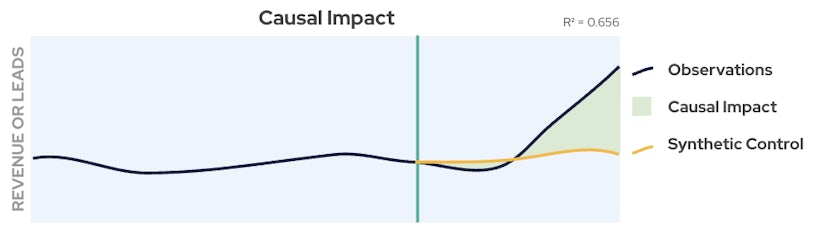

Although synthetic controls are not unique or limited to any one of the above types of experiments, their consideration in the design of a test is crucial depending on the next best alternative for providing a control to an experiment.

Synthetic data is simply machine-generated data which is designed to simulate real-world observed data. In the context of providing a test control, this is often a modelled expectation based on large volumes of historical data, which captures a number of variables including seasonality.

Synthetic controls are most often found in geo experiments. As well as being a more popular and accessible media test approach, geo experiments can also fall foul of issues around control geography selection, making the case for synthetic controls stronger.

In geo experiments, our approach, which is also a standard industry one, is to model anticipated sales inside the treatment geography prior to exposure, and then to compare actual treatment results against the estimated control. Of course, this approach creates additional considerations, many of which are controlled for:

- Externalities occurring mid-test, which the modelled data would not have previously accounted for. Synthetic controls consider a number of variables and are generated from numerous geographies, therefore they can take into account the effect of expected externalities at the point of generation. However, to capture ongoing real-world externalities, models can be refreshed as new data becomes available so that large impacts in the data which are not explainable by the experiment aren’t accidentally attributed to it.

- Accuracy of modelled data, as the control is synthetic. Back-testing is a common solution to evidencing the efficacy of a synthetic control – where some actual real-world observations are held back at the point of model generation, and then compared to the model prior to its use. For the observations which fall in this backtested period, the model can show its level of ‘fit’ and predictive accuracy. A common statistic used to quantify goodness of fit, which we implement, is the root-mean-square-error (RMSE). The smaller the RMSE the better the predictive accuracy of the synthetic control.
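The back-testing check described above can be sketched in Python as follows. The held-back observations and synthetic control predictions are invented for illustration; only the RMSE calculation itself is standard.

```python
import math


def rmse(actual, predicted):
    """Root-mean-square error between observed values and model predictions."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    squared_errors = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Hypothetical back-test: real observations held back when the model was built,
# compared with what the synthetic control predicted for the same periods.
held_back_actuals = [100.0, 110.0, 120.0, 130.0]
synthetic_control = [98.0, 112.0, 118.0, 132.0]

print(rmse(held_back_actuals, synthetic_control))  # 2.0
```

RMSE is expressed in the same units as the KPI, so it is easiest to judge relative to typical sales in the period: an error of 2 on sales of around 100 is a much tighter fit than an error of 2 on sales of around 10.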

The alternative approach to synthetic controls is called “matched market” testing, whereby similar geographies are selected as treatment and control and evaluated against one another. Audience leakage between markets is just one reason this approach is less favourable, as such challenges are difficult to mitigate. Using a synthetic control accounts for this to a certain degree, but care should still be taken.

Incrementality testing frameworks

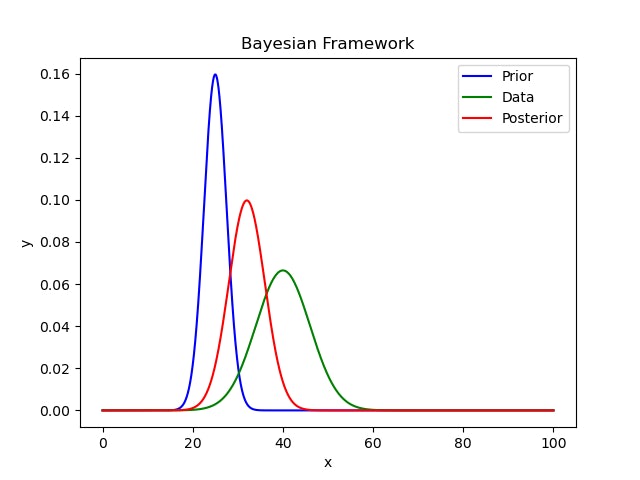

Frequentist and Bayesian incrementality tests represent two distinct statistical approaches to assess the causal impact of marketing interventions.

In a frequentist approach, the focus is on estimating parameters of interest based on observed data, without incorporating prior beliefs or uncertainties. Frequentist tests typically rely on hypothesis testing and confidence intervals to make inferences about the true effect of marketing activities. For example, in a frequentist test, the null hypothesis might assume that there is no difference in outcomes between the treatment and control groups. Statistical significance is then assessed using the p-value: the probability of seeing a difference at least as large as the one observed if the null hypothesis were true. A small p-value suggests the result is unlikely to be due to random chance alone. One major benefit of testing under a frequentist framework is that frequentist tests provide straightforward interpretations and are often perceived as more objective because, unlike a Bayesian test, they do not involve subjective priors. However, one major drawback is that frequentist tests may struggle with complex models or small sample sizes.
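To make the p-value concrete, here is a minimal Python sketch of one frequentist option, a permutation test on the difference in mean daily sales. This is an illustrative choice of test rather than the method used in this article, and the sales figures are hypothetical.

```python
import random
from statistics import fmean


def permutation_p_value(treatment, control, n_permutations=5_000, seed=0):
    """Two-sided permutation test for a difference in means.

    Under the null hypothesis the group labels are interchangeable, so we
    shuffle the pooled data and count how often a relabelled difference is
    at least as large as the one actually observed.
    """
    rng = random.Random(seed)
    observed = abs(fmean(treatment) - fmean(control))
    pooled = list(treatment) + list(control)
    n_treatment = len(treatment)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = abs(fmean(pooled[:n_treatment]) - fmean(pooled[n_treatment:]))
        if diff >= observed:
            at_least_as_extreme += 1
    # Add one to numerator and denominator so the estimate is never exactly zero.
    return (at_least_as_extreme + 1) / (n_permutations + 1)


# Hypothetical daily sales for a treatment and a control region.
treatment_daily = [130, 128, 135, 140, 132, 138, 136]
control_daily = [100, 102, 98, 105, 101, 99, 103]

p_value = permutation_p_value(treatment_daily, control_daily)
print(p_value)
```

A small p-value here says only that a gap this large would be unusual if the advertising had no effect; it does not by itself say how large the effect is, which is why lift and confidence intervals are usually reported alongside it.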

Alternatively, Bayesian incrementality tests explicitly incorporate prior beliefs or information about parameters of interest, allowing for the integration of prior knowledge with observed data to make inferences. Bayesian methods provide posterior distributions (a combination of the prior distribution and the data) that represent the updated beliefs about parameters after observing data, enabling a more nuanced understanding of uncertainty and variability.

Incrementality testing under the Bayesian framework tackles the lack of flexibility as seen in frequentist tests. Bayesian methods can handle complex models, small sample sizes, and hierarchical structures more effectively than frequentist approaches because of this ability to incorporate prior understanding.

We sometimes explain priors as “guardrails” in parameter estimation. The wider we make these “guardrails” the more we allow the media data to influence estimation. For an advertising channel with incrementality test data, it’s sometimes sensible to make these “guardrails” narrower for an appropriate estimate based on causal results. However, one major drawback of the Bayesian approach to incrementality testing is that it can be very complex and computationally expensive to conduct compared to the frequentist alternative.
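The “guardrails” idea can be illustrated with the simplest Bayesian update, a normal prior combined with a normally distributed estimate. This Python sketch is an assumption made for illustration and is far simpler than a full marketing mix model; the prior and data values are hypothetical.

```python
import math


def posterior_normal(prior_mean, prior_sd, estimate, standard_error):
    """Normal-normal conjugate update for a single parameter.

    Returns the posterior mean and standard deviation after combining a
    normal prior with a normally distributed estimate from the data.
    """
    prior_precision = 1.0 / prior_sd ** 2
    data_precision = 1.0 / standard_error ** 2
    posterior_variance = 1.0 / (prior_precision + data_precision)
    posterior_mean = posterior_variance * (
        prior_precision * prior_mean + data_precision * estimate
    )
    return posterior_mean, math.sqrt(posterior_variance)


# Hypothetical values: prior belief about a channel's return is 2.0,
# while the data alone suggests 4.0 with a standard error of 1.0.
narrow_mean, narrow_sd = posterior_normal(2.0, 0.5, 4.0, 1.0)  # narrow guardrails
wide_mean, wide_sd = posterior_normal(2.0, 2.0, 4.0, 1.0)      # wide guardrails

print(round(narrow_mean, 2), round(wide_mean, 2))  # 2.4 3.6
```

With the narrow prior the posterior stays close to the prior belief of 2.0, while the wide prior lets the data pull the estimate most of the way to 4.0.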

In summary, frequentist and Bayesian incrementality tests offer distinct advantages and limitations, and the choice between them depends on the specific requirements of the analysis, the available data, and the researcher’s preferences regarding uncertainty and prior knowledge incorporation.

It’s our preference to utilise a Bayesian approach to media effectiveness measurement at Impression. We take forward learnings from incrementality testing into our marketing mix models, which the flexibility of Bayesian statistics allows us to do.

Deriving the control group(s)

In incrementality testing, the derivation of the control group is a key characteristic. When deriving the control group, you could take an A/B test approach, use weighted averages or conduct some time series analysis.

A/B testing

An A/B test requires a comparison of a treatment group (A) with a control group (B). The key difference between an A/B test and the following alternatives is that the control group isn’t artificially designed. In the context of a geo-based A/B test, the region exposed to the marketing intervention will be compared with a different region, which wasn’t exposed. Therefore the control group in A/B testing is simply another group which hasn’t been specifically designed to mimic the treatment group.

The major drawback with this approach, in the context of a geo-experiment, is that you won’t be able to separate the percentage of lift generated by the treatment from that generated as a result of regional differences. Choosing a region which behaves most similarly to the treatment region is one way to control for this, however, the influence of regional differences simply can’t be quantified in a geo-based A/B test.

Weighted averages

Weighted averages offer an alternative, in which the control group is artificially designed to mimic the treatment group in absence of the treatment or marketing intervention. To conduct a test using weighted averages, you require several control groups. A weighted average of these control groups is then taken and the weights are chosen such that this average best mimics the treatment group during the pre-treatment phase. Post-treatment, you must continue to observe the control groups in order to generate new forecasts of what the treatment group would’ve been in the absence of the treatment. One drawback to this approach is that, in some cases, you may find that no set of weights exists for which the weighted average of the given control groups mimics the treatment group well. If this is the case, it is recommended that you turn to an A/B test approach or implement time series methods.
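A minimal Python sketch of the weighted-average idea is shown below. It assumes non-negative weights that sum to one and finds them with a coarse grid search, which is a simplification chosen for readability (a constrained optimiser would normally be used); all revenue figures are hypothetical.

```python
from itertools import product


def weighted_average(control_series, weights):
    """Combine several control series into one synthetic series."""
    n_periods = len(control_series[0])
    return [
        sum(w * series[t] for w, series in zip(weights, control_series))
        for t in range(n_periods)
    ]


def fit_weights(treatment_pre, controls_pre, step=0.05):
    """Grid-search non-negative weights summing to 1 that best mimic treatment."""
    n_steps = round(1 / step)
    best_weights, best_error = None, float("inf")
    for combo in product(range(n_steps + 1), repeat=len(controls_pre) - 1):
        remainder = n_steps - sum(combo)
        if remainder < 0:
            continue  # weights would sum to more than 1
        weights = [units / n_steps for units in (*combo, remainder)]
        synthetic = weighted_average(controls_pre, weights)
        error = sum((a - s) ** 2 for a, s in zip(treatment_pre, synthetic))
        if error < best_error:
            best_weights, best_error = weights, error
    return best_weights, best_error


# Hypothetical pre-treatment revenue for three control regions and the treatment region.
controls_pre = [
    [100.0, 120.0, 110.0, 130.0],
    [200.0, 180.0, 190.0, 210.0],
    [50.0, 70.0, 40.0, 80.0],
]
treatment_pre = [120.0, 128.0, 120.0, 144.0]
weights, error = fit_weights(treatment_pre, controls_pre)
print(weights, error)

# During the test, the same weights turn observed control revenue into a baseline.
controls_test = [[110.0, 125.0], [205.0, 195.0], [60.0, 65.0]]
counterfactual = weighted_average(controls_test, weights)
print(counterfactual)
```

If the best error found is still large relative to typical revenue, that is the situation described above in which no good set of weights exists for the available control groups.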

Time series methods

Similarly to weighted averages, time series methods generate a synthetic control group. However, time series methods don’t necessarily require several additional control groups. They involve modelling the treatment group during the pre-treatment phase and using this model to forecast what the treatment group would’ve been in the absence of the treatment. There are various ways to model time series data, such as linear regression and ARIMA. The main benefit of this approach over weighted averages is that it often requires much less data. However, it is often harder to implement, as it forces you to account for trends, seasonality and other externalities, which can be quite difficult without experience in statistical modelling.
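To make the idea concrete, here is a minimal sketch of the simplest possible time series counterfactual: a straight-line trend fitted to the pre-treatment data and extended into the treatment period. The function names and all figures are hypothetical, and the sketch deliberately ignores seasonality and external factors, which a real model (for example ARIMA) would need to handle.

```python
def fit_linear_trend(series):
    """Ordinary least squares fit of value = intercept + slope * t."""
    n = len(series)
    t_mean = (n - 1) / 2
    y_mean = sum(series) / n
    covariance = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(series))
    variance = sum((t - t_mean) ** 2 for t in range(n))
    slope = covariance / variance
    intercept = y_mean - slope * t_mean
    return intercept, slope

def forecast(intercept, slope, start, periods):
    """Extend the fitted trend for `periods` steps beginning at time index `start`."""
    return [intercept + slope * t for t in range(start, start + periods)]

# Hypothetical weekly revenue before the treatment (made-up numbers).
pre_treatment = [100, 104, 108, 112, 116, 120, 124, 128]
# Hypothetical weekly revenue observed during the treatment.
observed = [140, 145, 150, 152]

intercept, slope = fit_linear_trend(pre_treatment)
counterfactual = forecast(intercept, slope, len(pre_treatment), len(observed))
uplift = [obs - cf for obs, cf in zip(observed, counterfactual)]

print(counterfactual)
print(uplift, sum(uplift))
```

The counterfactual is the model’s estimate of revenue without the treatment, so the gap between it and the observed series is the estimated uplift per period.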

Example of an incrementality test

Background

- A global company specialising in garden appliances, called “Under the Hedge”, is considering advertising direct to consumers on Meta in the US for the first time.

Methodology

- To inform this decision, Under the Hedge decided to conduct a geo-based incrementality test, using a synthetic control group, to estimate the incremental impact of advertising on Meta.

- Treatment group – A region exposed to Meta advertising. Under the Hedge decided New York was to be the treatment group.

- Control groups – Region(s) not exposed to Meta advertising. Under the Hedge decided to use other mainland US states within reasonable proximity to New York, on the assumption that variance in consumer behaviour across these states is minimal.

Pre-Treatment

- Three years of historical revenue data for the relevant US states, where available, is used for analysis. A weighted average of the control regions is then taken to model the revenue generated in New York. The weights are chosen such that New York revenue is best mimicked.

- Suppose the following weights are optimal for mimicking New York revenue.

| Region | Pennsylvania | Vermont | Massachusetts |
|---|---|---|---|
| Weight | 0.4 | 0.3 | 0.3 |

During treatment

- Meta advertising is then switched on in New York, and revenue generated in New York, Pennsylvania, Vermont and Massachusetts is observed.

- It is beneficial to monitor the test at equidistant time intervals.

Post-treatment

- The observed revenue data for the control groups is then used to approximate what New York revenue should’ve been in absence of the Meta advertising.

- Suppose the following data, for a given month, was observed:

| Region | New York | Pennsylvania | Vermont | Massachusetts |
|---|---|---|---|---|
| Revenue | $12,000 | $12,000 | $10,000 | $11,500 |

New York predicted ≅ 0.4 x $12,000 + 0.3 x $10,000 + 0.3 x $11,500

New York predicted ≅ $4,800 + $3,000 + $3,450 = $11,250

- Calculate the uplift.

Uplift = $12,000 – $11,250 = $750

- Extract insights from results.

- Continue to monitor for an extended period after the test to assess any sustained effects and account for potential lagged impacts.
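The prediction and uplift arithmetic from the post-treatment steps above can be sketched in a few lines. The figures follow the calculation shown (Pennsylvania contributing 0.4 x $12,000); the variable names are illustrative, and the relative lift at the end assumes lift is defined as uplift divided by the predicted counterfactual.

```python
# Weights fitted in the pre-treatment phase.
weights = {"Pennsylvania": 0.4, "Vermont": 0.3, "Massachusetts": 0.3}

# Revenue observed in the control regions for the month, as used in the calculation.
control_revenue = {"Pennsylvania": 12_000, "Vermont": 10_000, "Massachusetts": 11_500}
observed_new_york = 12_000

# Synthetic control: what New York revenue is predicted to be without Meta ads.
predicted_new_york = sum(weights[region] * control_revenue[region] for region in weights)

# Uplift is the gap between observed and predicted revenue.
uplift = observed_new_york - predicted_new_york

# Assumption: relative lift expressed against the predicted counterfactual.
relative_lift = uplift / predicted_new_york

print(f"Predicted: ${predicted_new_york:,.0f}")
print(f"Uplift: ${uplift:,.0f}")
print(f"Relative lift: {relative_lift:.1%}")
```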

Results and interpretation

- Results suggest that switching on Meta ads has caused an increase in sales of $750 this month in New York alone.

- Insights gained from the incrementality test guide decision-making processes, informing future marketing investments and resource allocations based on evidence-based evaluations of channel effectiveness.

Common considerations of geo-testing

If you find yourself in a scenario where you can’t simply “switch off” advertising in a specific geo, campaign targeting is something to watch out for. When reducing advertising spend, it is recommended that you do this at the geography level rather than simply lowering the budget across the whole region. Platforms like Meta will often prioritise the users who are “most likely” to convert over everyone else. Although this is strategic for the campaign in question, for the purposes of measurement it can often lead to statistically insignificant results.

For example, say you wish to reduce TikTok spend by approximately 50% and observe the incremental loss in sales. You might find that the reduction in spend decreases the advertising reach and concentrates exposure on those most likely to convert rather than on new users. To counter this, reduce spend by exposing only 50% of the region in question. If advertising spend isn’t uniformly distributed across that region, expose only those parts of the region that together account for approximately 50% of spend.

Lastly, when conducting a geo-based incrementality test you should consider contamination between geographic regions. This occurs when a large number of users commute between the treatment and control regions for long enough to become exposed. Using a synthetic control accounts for this to a certain degree, but care should still be taken.

How to interpret the results of your incrementality test

Interpreting the results of an incrementality test involves understanding the limitations of statistical testing. Rather than providing definitive proof, these tests evaluate the strength of evidence for or against a hypothesis, so their outcomes are estimates rather than exact values. In Bayesian analysis, results are typically reported as posterior probabilities or credible intervals: a 95% or 99% posterior probability of a positive effect, for example, expresses how strongly the data and the model support the conclusion that the results are indeed incremental. Frequentist analysis, on the other hand, typically reports p-values, which indicate how unlikely the observed result would be if there were no true effect, but do not directly quantify the probability that the effect is real.
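As a rough illustration of what a p-value expresses, the sketch below compares an observed uplift with the gaps between actual and predicted revenue seen before the treatment, when no effect could have been present. This is one simple empirical check, not a full statistical test, and the function name and every figure are hypothetical.

```python
def empirical_p_value(pre_period_gaps, observed_uplift):
    """Share of no-treatment gaps at least as large as the observed uplift.

    Uses the common add-one correction so the estimate is never exactly zero.
    """
    at_least_as_large = sum(1 for gap in pre_period_gaps if gap >= observed_uplift)
    return (1 + at_least_as_large) / (1 + len(pre_period_gaps))

# Hypothetical monthly gaps (actual minus synthetic control) before the treatment.
pre_period_gaps = [
    120, -80, 40, -150, 60, -30, 90, -110, 20, -60,
    10, 200, -210, 75, -45, 130, -95, 15, -170,
]
observed_uplift = 750

p_value = empirical_p_value(pre_period_gaps, observed_uplift)
print(f"Empirical one-sided p-value: {p_value:.3f}")
```

With only 19 pre-treatment gaps, the smallest value this check can return is 1/20, which shows how the amount of historical data limits the strength of evidence a test can provide.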

It’s crucial for practitioners to approach test interpretation with a nuanced understanding of statistical inference. While statistical tests offer valuable insights into the effectiveness of marketing strategies, they inherently involve uncertainty and assumptions. By acknowledging these factors and carefully considering the context of the test, analysts can derive meaningful conclusions and make informed decisions regarding resource allocation and campaign optimisation strategies.

What if your channels are not incremental?

When it comes to incrementality testing, if a channel isn’t significant now, it doesn’t mean it never will be! When faced with insignificant results, there are multiple different options to consider. First, seasonal fluctuations may render certain channels ineffective at the specific time of the test. In such cases, it might be prudent to temporarily suspend advertising on those channels and reallocate resources to more opportune avenues.

Alternatively, if a channel consistently underperforms, experimentation with different advertising strategies could be beneficial. By conducting another round of testing with modified approaches, you can gain insights into consumer preferences and tailor strategies accordingly.

Specifically, if a test is inconclusive and/or a channel appears to be ineffective, the factors to consider are:

- Execution – are you advertising something with a short-term sales focus or a long-term brand-building focus, and is this appropriate for the channel?

- Offer – if you are offering a sales promotion, or highlighting your ordinary pricing, are you competitive?

- Creative – visuals, video and copy all have an impact – have these been tested to ensure you are putting your best effort forward?

- Inventory – for some channels, you may be able to specify in greater detail which inventory you are buying, or for which audience. Have these factors also been considered and tested?

Finally, in situations where determining the root cause of channel ineffectiveness proves arduous, reallocating resources to alternative channels with proven incremental value may be the most efficient solution. Ultimately, by remaining agile and responsive to performance data, you can adapt your marketing strategies to achieve your campaign objectives.

Next steps if your channels are incremental?

If you ran a geo-based incrementality test on a potential new channel by increasing budgets and observed uplift, the obvious next step is to expand your marketing efforts to more geographies. Instead of walking in blind, you’ll now have an idea of what to expect from each geography.

If you reduced budget for an existing channel and observed a significant decrease in revenue, you should plan to increase that budget again. You’ll have to take into account the delayed effects of advertising here, as increasing the budget again is unlikely to take effect instantly.

On the other hand, if you observe no significant change in revenue, it may well be in your best interest to consider reducing budget in more than just the treatment geography, and to consider re-deploying this budget into different activity elsewhere, via another experiment if possible.

Lastly, if you ran a geo-based incrementality test on an existing channel by increasing budgets and observed uplift, you may want to consider gradually increasing the budget for this channel across all geographies. Implementing MMM will further develop your understanding of channel saturation, which describes the diminishing return on investment as you continue to invest more in a particular channel and/or audience.

How long should an incrementality test last?

In incrementality testing, the duration of the test plays a critical role in ensuring the reliability and robustness of the results. While there’s no definitive timeline, aiming for a duration of 6 to 12 weeks is often recommended for optimal insights. This time frame allows for the observation of consistent trends, offering greater confidence in the validity of the findings.

Moreover, tests designed to measure declines in revenue should include periodic risk assessments to evaluate whether the potential loss outweighs the insights gained. If necessary, tests can be halted prematurely, but such decisions should be accompanied by a careful consideration of the test’s consistency and potential future use for calibration in MMM.

Even after the cessation of treatment, continued observation can provide valuable insights into any lagged effects, allowing for a more comprehensive understanding of the channel’s impact over time.

How to overcome challenges with incrementality testing

Incrementality tests, while valuable, come with inherent limitations that can reduce the accuracy of your media effectiveness insights. One common challenge is the inadequate fit of synthetic controls, which can skew results and lead to misinterpretation.

To address this, marketers can explore alternative methodologies, such as A/B testing, to validate findings and ensure robustness. Additionally, prior to testing, analysis of goodness-of-fit statistics and hold out testing can help you to build a synthetic control that fits well enough.

Moreover, disparities between treatment and control groups may introduce bias into the analysis, so a rigorous control selection process is needed to mitigate individual differences. The generalisability of findings from treatment geographies to the broader population may also be uncertain, which is a reason to run multiple tests across diverse geographies to capture geographical variations. Furthermore, lift tests often provide short-term insights, limiting their applicability to long-term strategic planning.

To extend the utility of lift test results, marketers can integrate them with Marketing Mix Modelling (MMM) to extrapolate findings and gain insights into medium- to long-term impacts, thereby enhancing the depth and breadth of their strategic decision-making processes. By acknowledging and overcoming these limitations, you can harness the full potential of incrementality testing to optimise your marketing strategies and drive sustainable growth.

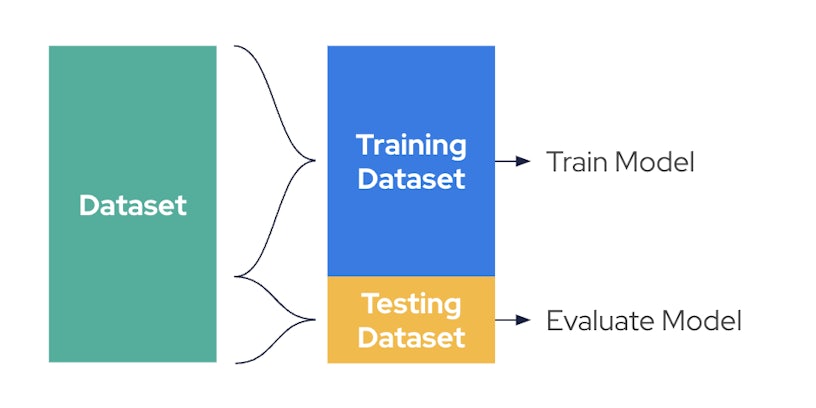

Hold out testing for the synthetic control

There are multiple measures you can use to validate the predictive accuracy of your synthetic control. A few of these measures include leave-one-out cross-validation (LOO-CV), the Bayesian Information Criterion (BIC), R-squared and hold out testing.

- LOO-CV is a method for estimating the predictive performance of a statistical model, such as the synthetic control group in incrementality testing. The model is fitted repeatedly, each time leaving out one observation and predicting it from the rest. LOO-CV helps to estimate how well a model will generalise to new, unseen data, hence providing insights into the predictive accuracy of your synthetic control group.

- BIC, on the other hand, is a criterion used for model selection. It balances the goodness of fit of the model with the complexity of the model, penalising models that are more complex. Lower values of BIC indicate a better balance between model fit and complexity, with the best model being the one with the lowest BIC value. This is particularly useful when using weighted averages to derive your synthetic control, as it helps to identify the point at which adding more control regions no longer improves the fit enough to justify the extra complexity.

- Alternatively, R-squared is used to assess how well your synthetic control mimics the treatment group during the pre-treatment phase. R-squared takes values between 0 and 1, 0 indicating poor fit and 1 indicating perfect fit. An R-squared of 0.7 indicates that 70% of the variation in the treatment group can be explained by the synthetic control group. Care must be taken when relying solely on R-squared to assess model fit as it doesn’t penalise for model complexity. This means that you may encounter overfitting, particularly when you observe an R-squared close to 1.

- Lastly, as opposed to using numerical goodness-of-fit statistics to validate the predictive accuracy of the synthetic control, you may wish to conduct a hold out test. Hold out testing involves splitting your pre-treatment data into two parts: train data and test data. The train data should be between 70% and 80% of the total pre-treatment data. The remaining 20-30% of the pre-treatment data is the test data. You would then fit a synthetic control group to the train data and use it to forecast the test data. As the test data is unseen by the synthetic control, comparing these forecasts against what actually happened can provide insights into the predictive accuracy of the actual synthetic control. If the forecasts are close to the test data, you’ll have confidence that the synthetic control will perform well. This is also sometimes referred to as back-testing when the hold out covers a period of observations before the test.
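The hold out procedure described above can be sketched as follows. This is a simplified illustration that assumes only two control regions and a 75/25 split, with the most recent periods held out. The function names, the use of mean absolute percentage error (MAPE) as the accuracy measure and all data are assumptions for this example.

```python
def fit_weight(treatment, control_a, control_b, steps=100):
    """Find w in [0, 1] so that w * A + (1 - w) * B best matches the treatment series."""
    best_w, best_error = 0.0, float("inf")
    for i in range(steps + 1):
        w = i / steps
        error = sum(
            (y - (w * a + (1 - w) * b)) ** 2
            for y, a, b in zip(treatment, control_a, control_b)
        )
        if error < best_error:
            best_w, best_error = w, error
    return best_w

def mape(actual, predicted):
    """Mean absolute percentage error between two equally long series."""
    return sum(abs(a - p) / abs(a) for a, p in zip(actual, predicted)) / len(actual)

# Hypothetical pre-treatment revenue per period (made-up numbers).
region_a = [100, 110, 105, 120, 115, 130, 125, 140, 135, 150, 145, 160]
region_b = [200, 190, 210, 195, 205, 185, 215, 180, 220, 175, 225, 170]
noise = [1, -1, 2, -2, 1, -1, 0, 1, -1, 2, -2, 1]
treatment = [0.6 * a + 0.4 * b + e for a, b, e in zip(region_a, region_b, noise)]

# Train on the first 75% of the pre-treatment data; hold out the most recent 25%.
split = int(len(treatment) * 0.75)
w = fit_weight(treatment[:split], region_a[:split], region_b[:split])

# Forecast the held-out periods using only the control regions and the fitted weight.
forecasts = [w * a + (1 - w) * b for a, b in zip(region_a[split:], region_b[split:])]
holdout_error = mape(treatment[split:], forecasts)

print(f"Fitted weight on region A: {w:.2f}")
print(f"Hold out MAPE: {holdout_error:.2%}")
```

A low error on the held-out periods suggests the synthetic control generalises beyond the data it was fitted to. What counts as low enough depends on the size of the uplift you hope to detect.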

How does incrementality testing complement Marketing Mix Modelling?

As mentioned earlier, incrementality testing serves as a valuable complement to Marketing Mix Modelling (MMM) by enhancing the calibration process and reducing uncertainty in the model’s predictions. By isolating the incremental impact of specific marketing interventions, incrementality testing provides valuable insights that inform and refine the MMM model. The results of incrementality tests can help identify channels or tactics with the largest uncertainty in their estimated impact, prompting the model to recommend further testing or adjustments to improve accuracy.

Ultimately, integrating incrementality testing with marketing mix models makes a business more effective at understanding the impact of its marketing activities and optimising resource allocation.