October was certainly a busy month for SEO. Google launched and completed both the October 2023 core update and the October 2023 spam update, and the month ended with another core algorithm update on the horizon for November.

In this article, we cover what these updates involved and their impact. Alongside these major updates, we also cover other topics such as the latest update to Google’s Rich Results Test tool, recent amendments made to rich results and the E-E-A-T Knowledge Graph update.

Allow our traffic light system to guide you to the articles that need your attention. Watch out for red light updates, as they’re major changes that will need you to take action. Amber updates may make you think and are worth knowing, but aren’t urgent. Finally, green light updates are great for your SEO and site knowledge but are less significant than the others.

Keen to know more about any of these changes and what they mean for your SEO? Get in touch or visit our SEO agency page to find out how we can help.

In this post, we’ll explore:

- Google completes rollout of October 2023 core algorithm update

- Google releases the November 2023 core update

- Google rolls out October 2023 spam update

- Google adds support for paywalled content in Rich Results Test tool

- Inside Google’s massive 2023 E-E-A-T Knowledge Graph update

- Google rolls out about this image and more SGE links

- Google Rich Results for events removed from search snippets

- Google updates favicon search documentation

Google completes rollout of October 2023 core algorithm update

Google completed the rollout of its October 2023 core search update on the 19th of October. The update is designed to improve search rankings by emphasising high-quality content: it targets the fundamental search algorithms and aims to elevate trustworthy, authoritative content while demoting low-quality websites.

The implications for website rankings can be significant following Google’s core updates, with some sites experiencing increased visibility and traffic, while others may see drops in rankings. During the active rollout of such updates, daily fluctuations in search results are common as Google’s algorithms adjust. It’s advisable to be patient and regularly monitor site analytics.

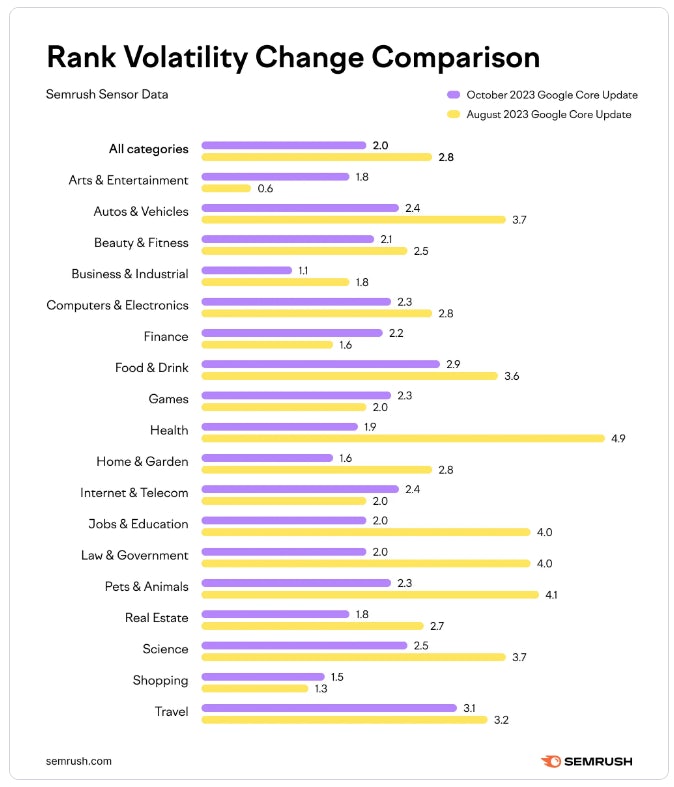

Data collected by Semrush shows the relative change in rank volatility during the October 2023 Core Update as compared to the August 2023 Core Update. For websites negatively impacted by core updates, Google suggests focusing on content quality, expertise, and user experience. Identifying areas for improvement by adding high-quality content or making website enhancements can aid in recovery.

Google launches core updates to enhance the overall quality of its search results and to counteract attempts to manipulate rankings using questionable SEO practices. These updates are released multiple times a year, each targeting different ranking factors and having varying impacts.

To navigate core updates successfully, website owners should:

1. Prioritise creating helpful, factual content that establishes expertise and authority.

2. Optimise their websites for a positive user experience on various devices.

3. Regularly monitor search analytics and performance.

4. Make incremental improvements over time rather than reacting solely during updates.

5. Keep in mind that quality content and websites are rewarded in the long term.

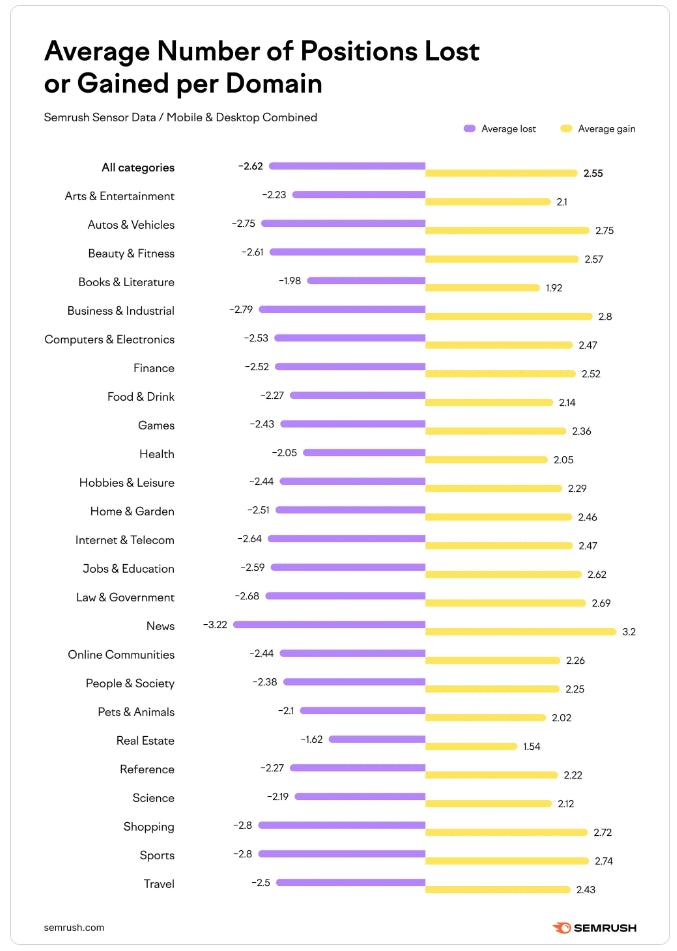

In comparison to the core update in August, the October 2023 update was considered slightly less volatile. An analysis from Semrush found that the average number of positions gained or lost during the October 2023 Core Update was roughly 2.5, whereas in August it was just over 3.

While core updates introduce uncertainty, they are ultimately intended to enhance the quality of Google Search. With ongoing refinement and the delivery of valuable content, websites can maintain visibility even during significant algorithm shifts.

Google releases the November 2023 core update

The November 2023 core update is the fourth core algorithm update of 2023. Here is the timeline of the three that came before it:

- March 2023 – The first core update

  - Commenced March 15th

  - Concluded March 28th

- August 2023 – Core update

  - Commenced August 22nd

  - Concluded September 7th

- October 2023 – Core update

  - Commenced October 5th

  - Concluded October 19th

Google has stated that the November update improves a different core system from the one targeted by the previous month’s update. Google has also reiterated that its guidance for core updates is the same for both.

Additionally, Google has announced an upcoming reviews update scheduled for the following week. This update signifies a shift in Google’s approach, as they will no longer provide periodic notifications of improvements to their reviews system. Instead, these improvements will occur on a regular and continuous basis.

Why is this significant?

When Google updates its search ranking algorithms, your website’s performance in search results can either improve or decline. Being aware of these updates allows us to pinpoint whether any changes in your website’s ranking are due to modifications you made or alterations in Google’s ranking algorithm. With the recent core ranking update from Google, it’s advisable to monitor your website’s analytics and rankings closely in the coming weeks to understand the impact of this update on your site.
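One rough way to separate the effect of an update from your own site changes is to compare average daily clicks before and after the rollout window. The sketch below is only an illustration: the function name and the `daily_clicks` input are hypothetical, and the dates are the October 2023 core update window from the timeline above, used as an example.

```python
from datetime import date
from statistics import mean

# Rollout window of the October 2023 core update, taken from the timeline above.
ROLLOUT_START = date(2023, 10, 5)
ROLLOUT_END = date(2023, 10, 19)


def compare_around_rollout(daily_clicks, start=ROLLOUT_START, end=ROLLOUT_END):
    """Compare average daily clicks before and after a rollout window.

    daily_clicks maps a date to that day's click count (a hypothetical
    export from your analytics tool). Days inside the window are ignored,
    because rankings tend to fluctuate while an update is rolling out.
    """
    before = [clicks for day, clicks in daily_clicks.items() if day < start]
    after = [clicks for day, clicks in daily_clicks.items() if day > end]
    if not before or not after:
        raise ValueError("need data on both sides of the rollout window")

    avg_before, avg_after = mean(before), mean(after)
    # Percentage change relative to the pre-rollout average (None if it was zero).
    change_pct = None
    if avg_before != 0:
        change_pct = (avg_after - avg_before) * 100 / avg_before
    return {"before": avg_before, "after": avg_after, "change_pct": change_pct}
```

A large change that lines up with the rollout dates, with no site changes deployed in the same period, points towards the algorithm update. A change that starts on the day of one of your own releases points towards the release.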

Google rolls out October 2023 spam update

Hours before Google announced its third core algorithm update of the year, the search engine rolled out the October 2023 Spam Update. This update was launched on the 4th of October and was completed on the 20th of October.

The October 2023 Spam Update is a global update designed to address various types of spam that have been reported by community members in Turkish, Vietnamese, Indonesian, Hindi, Chinese, and other languages. Google anticipates that this update will result in a decrease in the amount of spam in search results, especially those sites and webpages that utilise tactics like:

- Cloaking: Cloaking is a technique where a website displays one version of a webpage to search engines and another one to visitors. This tactic is typically employed to manipulate search rankings.

- Hacked content: Hacked content is content that is placed on a site without permission and often as a result of a hacker exploiting a site’s vulnerabilities.

- Auto-generated content: Auto-generated content that adds little or no value to users is considered spam.

- Content scraping: Content scraping is when content has been taken from a website and added to another site without permission.

Why should you care?

Google is committed to actively combating spam techniques while prioritising high-quality content that provides valuable information to users. As a best practice, adhere to the E-E-A-T principles to improve the likelihood of boosting your website’s ranking in search results.

Google adds support for paywalled content in Rich Results Test tool

Google has introduced an update to its Rich Results Test tool, enabling the validation of structured data markup for paywalled content. This development is designed to assist publishers in accurately indicating subscription-based content on their websites.

Google is refining its approach to indexing and displaying paywalled content in search results, aiming to direct users to relevant articles while preventing practices like “cloaking,” where websites display different content to Google compared to users.

This update comes in response to concerns raised by publishers about Google products like Search and the AI chatbot Bard surfacing paywalled content without compensation. It’s important to note that while adding structured data can clarify what is behind a paywall, it does not guarantee that paywalled content will appear in search results or AI-generated summaries. Other factors like site crawlability and indexation also play a role in whether Google displays these pages.

Publishers can employ structured data markup, specifically schema.org markup, to specify which sections of a webpage are behind a paywall. To do this, they can add JSON-LD or microdata that tags each paywalled block. For example, given a block like this:

```html
<div class="paywall">This content requires a subscription.</div>
```

The markup identifies the div as non-free access:

```json
"hasPart": {
  "@type": "WebPageElement",
  "isAccessibleForFree": "False",
  "cssSelector": ".paywall"
}
```

With this update, Google’s Rich Results Test tool can verify the correct implementation of these paywall markup schemes.

The validation support pertains specifically to properties recommended by Google, such as “isAccessibleForFree” and “cssSelector.” This markup can be applied to various content types, including articles, blog posts, courses, reviews, messages, and other CreativeWork content.

For pages with multiple paywalled sections, publishers can specify multiple cssSelector values in an array. Google’s documentation provides examples for both single and multi-paywall implementations.
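As a minimal sketch of how this markup could be generated for one or several paywalled sections, the Python helper below builds the JSON-LD and wraps it in a script tag. The function names, the `NewsArticle` default and the example selectors are our own assumptions for illustration, not taken from Google’s documentation. The sketch also emits the boolean `false` (schema.org defines `isAccessibleForFree` as a Boolean) rather than the string form shown in the snippet above.

```python
import json


def paywall_jsonld(headline, selectors, article_type="NewsArticle"):
    """Build JSON-LD that marks each CSS selector as a paywalled section.

    All names here are illustrative. `selectors` is a list of CSS class
    selectors such as ".paywall".
    """
    if not selectors:
        raise ValueError("at least one paywalled selector is required")

    parts = [
        {
            "@type": "WebPageElement",
            "isAccessibleForFree": False,  # serialised as JSON `false`
            "cssSelector": selector,
        }
        for selector in selectors
    ]
    return {
        "@context": "https://schema.org",
        "@type": article_type,
        "headline": headline,
        "isAccessibleForFree": False,
        # One section: a single object. Several sections: an array of objects.
        "hasPart": parts[0] if len(parts) == 1 else parts,
    }


def as_script_tag(data):
    """Wrap the JSON-LD in the script tag that goes in the page's HTML."""
    body = json.dumps(data, indent=2)
    return '<script type="application/ld+json">\n' + body + "\n</script>"


if __name__ == "__main__":
    markup = paywall_jsonld("Example headline", [".paywall-intro", ".paywall-body"])
    print(as_script_tag(markup))
```

Whatever the helper produces should still be pasted into the Rich Results Test tool to confirm that it validates.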

In conclusion, while markup may not resolve all issues related to Google’s use of paid content, it enhances structured data, providing more transparency, which benefits both publishers and Google. Google offers troubleshooting tips for publishers having difficulties implementing the markup in its official help document.

Inside Google’s massive 2023 E-E-A-T Knowledge Graph update

Google’s latest major update to its Knowledge Graph was launched in July 2023 and has since become known as the ‘Killer Whale’ update. Although it predates them, it has had implications for the subsequent August 2023 and October 2023 core updates.

The Killer Whale update focused on the refinement of subtitles for people within the Knowledge Graph. There were substantial changes to the classification of roles with the intent to identify those that exhibit strong E-E-A-T signals. In response to this, there has been a noticeable increase in Person entities labelled as ‘Writer’ or ‘Author’. This heightened emphasis on writers is likely to reflect their perceived authority within their respective domains.

Aside from the focus on Person entities, this update highlighted Google’s diminishing reliance on traditional knowledge sources. Google is moving away from relying exclusively on human-curated third-party sources like IMDb.

Google is likely to reduce its reliance on Wikipedia for Corporation entities in a similar way at some point in the future. For this reason, this update emphasises the importance of diversifying your knowledge panel strategy and fostering organic growth, rather than relying solely on Wikipedia or other human-curated sources.

Google rolls out about this image and more SGE links

Google has begun introducing the “about this image” feature, which they initially announced in May. Additionally, they are enhancing the Search Generative Experience’s AI-generated answers by including descriptions of information sources along with links to reputable websites that discuss those sources.

This means that the AI-generated source descriptions now come with added links to high-quality sites for supporting information.

Google has launched the “about this image” feature in Google Search for English language users worldwide. This feature provides detailed information about an image, including:

- Image history: “About this image” reveals when an image was first detected by Google Search and whether it was previously published on other websites long before. This can help prevent the misrepresentation of older images as current events.

- Usage and descriptions: Users can explore how an image is utilised on various web pages and access information from sources such as news outlets and fact-checking sites. This data can be valuable in assessing the authenticity and context of the image.

- Image metadata (including AI): The feature also displays available metadata provided by image creators and publishers, including details that indicate whether the image was generated or enhanced by artificial intelligence (AI). All images generated by Google’s AI tools will include this metadata in the original file.

Google Rich Results for events removed from search snippets

During the Google Search Central live SEO event in Zurich, Google confirmed the removal of event rich results from appearing under search result snippets. This confirmation came from Ryan Levering of Google during the Q&A session.

In recent weeks, several SEO professionals noticed the absence of event rich results, and a German SEO blog and others in the industry reported on this development. Initially, it was unclear whether this was a bug or a deliberate action by Google. However, it was later clarified that Google intentionally removed event rich results.

This change follows a series of adjustments by Google, including reducing the visibility of FAQ rich results and entirely removing “HowTo” rich results from search. Google has also eliminated indented results as an interface feature but introduced support for vehicle listing structured data, at least for now.

The significance of this change lies in the potential impact on website traffic, as event search result snippets no longer feature event rich results. This might lead to a decrease in click-through rates. It’s important to keep in mind that while implementing Google structured data can yield short-term benefits, its effectiveness may change in the future. Whether it’s worth the effort depends on individual circumstances and priorities.

Google updates favicon search documentation

Google has made updates to its favicon search developer documentation, specifically removing the Favicon user agent section and providing clarification on the requirements for Google to index and display favicons in Google Search.

The key points from the update are as follows:

- Google now emphasises that website owners must allow both the Googlebot and Googlebot-Image user agents to crawl, enabling the indexing and display of site favicons in Google Search.

- Both the favicon file and the home page must be crawlable by Googlebot-Image and Googlebot, without any blocking in place.

- Google has eliminated information related to the Google Favicon HTTP user-agent string from its documentation, as it is no longer in use. However, this change does not result in any significant alterations for site owners.

- The Google Favicon relied on the Googlebot-Image and Googlebot robots.txt user agent tokens, which are still supported.

The update in the documentation highlights the importance of allowing Googlebot and Googlebot-Image for favicon crawling to ensure your site’s favicons appear in Google Search results.

If you encounter issues with favicon display in search results, it’s advisable to review the updated developer documents on this topic and ensure that you haven’t disallowed Googlebot and Googlebot-Image in your robots.txt directives.
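The robots.txt check described above can be sketched with Python’s standard-library `urllib.robotparser`. This is only an approximation: the function name and the example URLs are hypothetical, and the standard-library parser does not match user agents in exactly the same way as Google’s own robots.txt handling, so treat the result as a first pass rather than a definitive answer.

```python
from urllib.robotparser import RobotFileParser

# The two user agent tokens the favicon documentation says must be allowed.
GOOGLE_AGENTS = ("Googlebot", "Googlebot-Image")


def favicon_crawl_report(robots_txt, home_url, favicon_url):
    """Report, per user agent, whether both the home page and favicon are allowed.

    robots_txt is the text content of the site's robots.txt file.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())

    report = {}
    for agent in GOOGLE_AGENTS:
        report[agent] = (
            parser.can_fetch(agent, home_url)
            and parser.can_fetch(agent, favicon_url)
        )
    return report


if __name__ == "__main__":
    # Hypothetical robots.txt that blocks the favicon for Googlebot-Image only.
    example_rules = "\n".join([
        "User-agent: Googlebot-Image",
        "Disallow: /favicon.ico",
        "",
        "User-agent: *",
        "Disallow:",
    ])
    print(favicon_crawl_report(
        example_rules,
        "https://www.example.com/",
        "https://www.example.com/favicon.ico",
    ))
```

If either user agent comes back as blocked, the matching `Disallow` rule in robots.txt is the first thing to review.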

Keep up-to-date with our dedicated algorithm and search industry round-ups. For any further information about these posts – or to learn how we can support your SEO – get in touch today.