Measuring the effectiveness of a media channel or campaign accurately is a common challenge in digital marketing. We’re always seeking better ways to measure impact and assign credit to media channels, so that we understand which channels perform best and what impact each one has.

- The challenges of measuring media effectiveness

- What is incrementality testing?

- Why would you conduct incrementality testing?

- Quantifies direct media effectiveness

- Calibrates your media mix model

- Validates marketing strategies

- Provides easily interpretable results

- Example of incrementality testing results

- Advantages of incrementality testing

- Causal impact

- Avoids potential bias due to group differences

- Data-driven media planning

- Uses advanced, up-to-date software

- Iterative improvement in the modelling process

- Iterative improvement in the media planning process

- Limitations of incrementality testing

- Data collection

- Selection of control groups

The challenges of measuring media effectiveness

One of the most frequently used methods for measuring media effectiveness in online advertising is multi-touch attribution modelling. Multi-touch attribution uses “touch-points” throughout the customer journey to model and assign credit to various media channels. However, these models can only assign credit based on the data that has been collected.

With the decline of third-party cookies and the ever-increasing use of multiple devices and mobile in-app browsers, measuring the customer journey becomes increasingly difficult. Incrementality testing, on the other hand, goes beyond attribution models, helping you understand the causal impact of specific marketing efforts and avoid misattributing success.

Another frequent challenge faced by marketers is the accurate measurement of long-term media effects. Incrementality testing helps strengthen understanding of both short-term and long-term media effects when tests are run by geographical location, with a media channel active in the “treatment” location only. The time it takes for the impact to become significant is called the delayed (or lagged) effect. Alternatively, deactivating a media channel in the treatment location only and observing how long the impact takes to decay provides useful insights into the carryover effect of that media channel, which is commonly modelled as adstock. (Saturation, by contrast, describes diminishing returns as spend increases.) These insights are crucial for developing a reliable media plan that contributes to long-term business growth.
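The decaying impact after a channel is switched off can be illustrated with a simple geometric decay, in which each period retains a fixed fraction of the previous period's effect. This is a minimal sketch under that assumption, not the model used in our tests; the function name `geometric_carryover`, the spend figures, and the decay rate of 0.5 are all hypothetical.

```python
def geometric_carryover(spend, decay):
    """Return the effect carried into each period, assuming every period
    keeps `decay` (0 <= decay < 1) of the previous period's effect."""
    carried = []
    previous = 0.0
    for x in spend:
        previous = x + decay * previous
        carried.append(previous)
    return carried

# Hypothetical channel: active for three weeks, then switched off.
spend = [100, 100, 100, 0, 0, 0]
effect = geometric_carryover(spend, decay=0.5)
print(effect)
```

In this toy example the effect keeps halving after week three rather than dropping straight to zero, which is the pattern a deactivation test in the treatment location is designed to reveal.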

Having a strong understanding of media impact, delayed effects and carryover effects is vital for improving media planning and in turn, increasing overall business performance.

This is where incrementality testing comes into its own!

What is incrementality testing?

From a media perspective, incrementality testing is a statistical method used to measure and estimate the direct impact, cumulative impact, and short/long-term effects of a campaign or particular media channel.

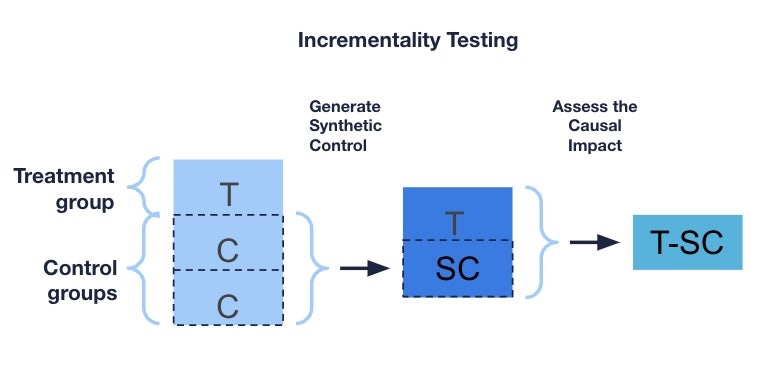

Incrementality testing aims to establish a causal relationship by comparing a “treatment” group with a “control” group. The treatment group is the group exposed to the marketing experiment, and the control group is a group that hasn’t been affected by it. The test can be run using various methods depending on its context. In each case, it quantifies media impact from data by separating the effect of the media channel itself from other external factors.

As mentioned previously, incrementality testing can be conducted using geographical location. We call these types of tests geo-experiments. A geo-experiment aims to measure and assess variations in media impact across areas or regions. Geo-experiments are therefore one specific type of incrementality testing, and the principles of incrementality testing can be applied to understand the causal impact of marketing interventions whether they are geo-specific or not (see an example of where we conducted geo-experiments with our client Topps Tiles here).

Here at Impression, we perform incrementality testing using a synthetic control group. A synthetic control group serves as an artificial comparison for the treatment group. The synthetic data is generated by a weighted sum of the geo-segments around it to account for any slight regional differences. This approach to incrementality testing also helps to account for confounding variables and external factors, providing a more accurate estimation of the media impact.

We use a Bayesian framework to derive the synthetic control group. This allows us to measure and account for the uncertainty in the estimated impact and to carry it through the media planning process. For example, suppose the incrementality test estimates that turning on a particular media channel causes a cumulative increase in revenue of £1000. Bayesian models let us attach a credible interval (the range of most probable values) to this point estimate, giving a fuller picture throughout the media planning process.
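To illustrate how an interval can be attached to a point estimate such as the £1000 above, the sketch below computes the narrowest interval containing 94% of a set of posterior draws. The draws here are simulated from a normal distribution purely for illustration (a real test would take them from the fitted model), and the function name `highest_density_interval` and the 94% level are assumptions.

```python
import math
import random

def highest_density_interval(samples, mass=0.94):
    """Return the narrowest interval that contains `mass` of the samples."""
    ordered = sorted(samples)
    n = len(ordered)
    n_inside = math.ceil(mass * n)
    best_low, best_high = ordered[0], ordered[-1]
    # Slide a window of n_inside samples and keep the narrowest one.
    for i in range(n - n_inside + 1):
        low, high = ordered[i], ordered[i + n_inside - 1]
        if high - low < best_high - best_low:
            best_low, best_high = low, high
    return best_low, best_high

# Simulated (hypothetical) posterior draws of cumulative revenue impact in GBP.
random.seed(0)
posterior_draws = [random.gauss(1000, 150) for _ in range(4000)]

point_estimate = sum(posterior_draws) / len(posterior_draws)
low, high = highest_density_interval(posterior_draws)
print(f"Estimated impact: {point_estimate:.0f} (94% interval: {low:.0f} to {high:.0f})")
```

A wide interval signals that the estimate should be treated with caution when planning budgets, while a narrow one supports acting on the point estimate with more confidence.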

Formally, Bayesian models use a combination of prior knowledge and the data to produce what’s called the posterior distribution. It is this posterior distribution that contains the point estimates and the corresponding uncertainties. To derive a synthetic control group, we elicit non-informative priors, and hence let the data do the talking!
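As a rough illustration of how the prior and the data combine, the sketch below uses a textbook conjugate update for an unknown mean with normally distributed observations of known variance. It is a simplification chosen for clarity, not the model we fit in practice; the function name `normal_posterior` and all of the figures are hypothetical.

```python
def normal_posterior(prior_mean, prior_var, data, noise_var):
    """Posterior mean and variance of an unknown mean, given a Normal prior
    and Normal observations with known variance `noise_var`."""
    n = len(data)
    precision = 1 / prior_var + n / noise_var
    mean = (prior_mean / prior_var + sum(data) / noise_var) / precision
    return mean, 1 / precision

# Hypothetical weekly uplift observations (GBP) with an assumed noise variance.
observed_uplift = [980, 1040, 1010, 970]
noise_var = 50 ** 2

# A very wide (non-informative) prior versus a narrow prior centred on zero.
vague_mean, vague_var = normal_posterior(0, 10_000 ** 2, observed_uplift, noise_var)
strong_mean, strong_var = normal_posterior(0, 25 ** 2, observed_uplift, noise_var)

print(f"Non-informative prior: posterior mean {vague_mean:.1f}")
print(f"Narrow prior at zero:  posterior mean {strong_mean:.1f}")
```

With the wide prior, the posterior mean sits almost exactly on the average of the observations, whereas the narrow prior pulls it halfway back towards zero. This is what letting the data do the talking means in practice.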

To perform incrementality tests this way, approximately 2-3 years of clean, well-formatted historical time series data is required. Within this data, there must be the treatment group, time of treatment, and numerous control groups concerning similar campaigns or locations, which we wish to use to approximate a synthetic control group.

Provided we have this data, a weighted sum of the similar control groups is taken to derive the synthetic control used for testing. These weights are modelled under a Bayesian framework, so the uncertainties associated with the synthetic control are carried through to inform the media planning process. The differences between the observed treatment and the synthetic control are then recorded to produce the causal media impact and its associated uncertainty.
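The weighted-sum idea can be sketched in a few lines. Note that this example swaps the Bayesian model for an ordinary least-squares fit of the weights (via NumPy's `np.linalg.lstsq`), so it yields point estimates only and no uncertainties. All revenue figures and variable names are hypothetical.

```python
import numpy as np

# Hypothetical weekly revenue for two control regions (columns: region A, region B).
controls_pre = np.array([[100.0, 80.0],
                         [110.0, 95.0],
                         [105.0, 90.0],
                         [120.0, 85.0]])
controls_post = np.array([[115.0, 88.0],
                          [118.0, 92.0],
                          [112.0, 86.0]])

# Hypothetical revenue for the treated region before and after the intervention.
treated_pre = np.array([92.0, 104.0, 99.0, 106.0])
treated_post = np.array([94.2, 95.6, 91.6])

# Fit weights so the weighted sum of controls tracks the treated region
# during the pre-intervention period (least squares, not Bayesian).
weights, *_ = np.linalg.lstsq(controls_pre, treated_pre, rcond=None)

# The synthetic control estimates what the treated region would have done
# without the treatment.
synthetic_post = controls_post @ weights

# Causal impact: observed treatment minus synthetic control, then accumulated.
impact = treated_post - synthetic_post
cumulative_impact = np.cumsum(impact)

print("weights:", np.round(weights, 3))
print("pointwise impact:", np.round(impact, 1))
print("cumulative impact:", np.round(cumulative_impact, 1))
```

In this toy data the treated region is an exact 60/40 blend of the two controls before the intervention, so the fit recovers those weights and the cumulative impact ends at roughly -32. A Bayesian fit would additionally place a distribution over the weights, which is where the intervals around the impact come from.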

Why would you conduct incrementality testing?

Quantifies direct media effectiveness

The difference between the synthetic control and the treatment observations gives the estimated media impact and any uncertainty. This impact can be used to provide useful insights into the media planning process and for comparison with other media channels. Incrementality testing helps determine which advertising channels contribute most effectively to business performance.

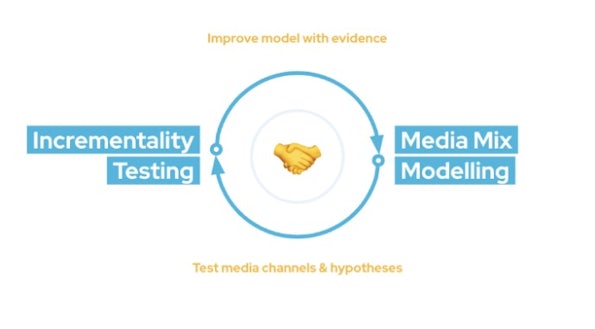

Calibrates your media mix model

Media impact estimates can be used to fine-tune your media mix model to give a better representation of your media mix, and hence to inform your budget allocation forecasts.

Validates marketing strategies

Incrementality testing allows us to validate how well a current marketing strategy works and whether it needs updating. For instance, if a new media channel is found to have a significant impact through incrementality testing, then a budget should be allocated for this channel in the next marketing strategy.

Provides easily interpretable results

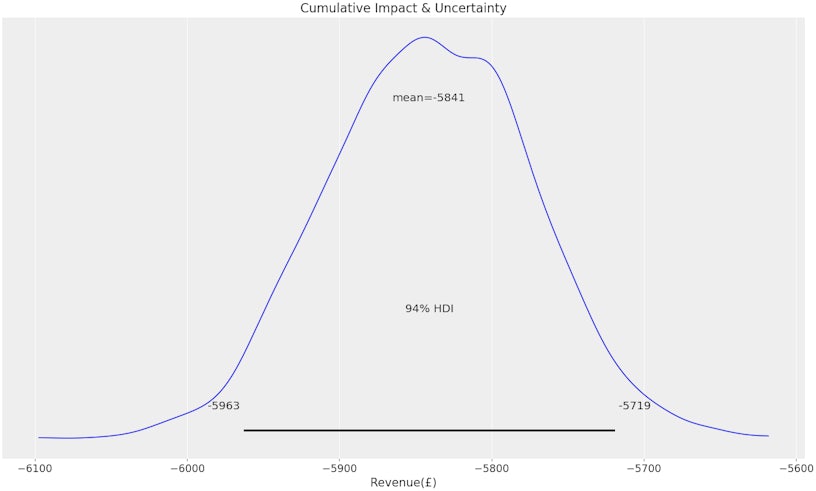

Cumulative impact is expressed in the same units as the dependent variable. If you’re measuring revenue, the cumulative impact tells you how much revenue you’ve gained or lost up to a given point in time, along with the associated uncertainty.

Example of incrementality testing results

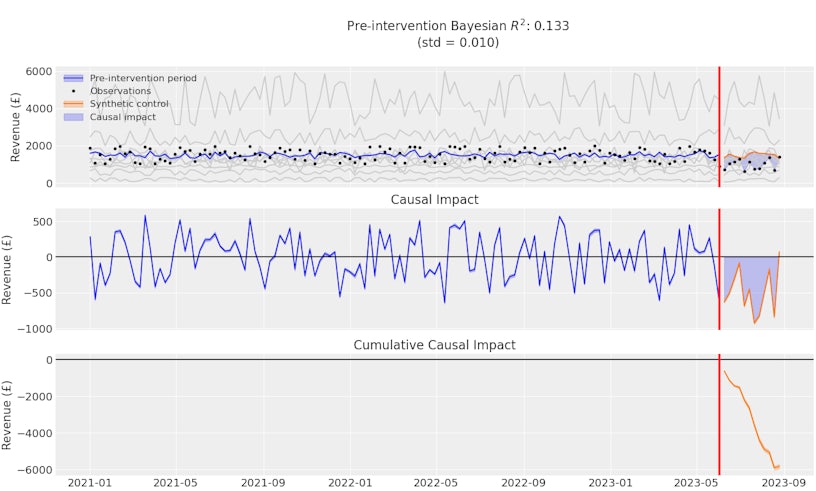

The “pre-intervention Bayesian R2” is a measure of how well the synthetic control fits the treatment group before the intervention. In this case, as it is close to one, the model is a very good fit!

The top graph shows both pre and post-treatment periods of revenue over time. The grey lines represent the control groups used to derive the synthetic control, and the red vertical lines represent the treatment/intervention period.

The middle graph shows the direct causal impact, that is, the synthetic control subtracted from the treatment observations. The blue-shaded area represents the causal impact.

The bottom graph shows the cumulative causal impact, that is what happens to the dependent variable during the post-intervention period. Here we can see that approximately £6000 in revenue has been lost as a result of the treatment. Additionally, we can see that the treatment effects are immediate due to an instant decline in cumulative impact from the point of treatment.

Based on this graph, the estimated average loss in revenue as a result of the treatment is approximately £5841. There is a 94% probability that the true amount lost is at least £5719 and at most £5963.

Advantages of incrementality testing

Causal impact

Constructing a synthetic control group to mimic the treatment group before the treatment helps to isolate the media impact in the absence of any confounding variables.

Avoids potential bias due to group differences

By using a synthetic control, we reduce the errors and biases that arise from individual differences between the control and treatment groups.

Data-driven media planning

Incrementality testing enables you to quantify the impact generated by a campaign or media channel and use this to inform your marketing strategy.

Uses advanced, up-to-date software

Here at Impression, we use the most up-to-date and statistically advanced software packages to aid in the modelling process.

Iterative improvement in the modelling process

Once an initial synthetic control group has been derived, we can assess which control groups contribute towards its derivation. Through model comparison techniques we can derive an optimal synthetic control group that best captures the treatment group in the absence of the treatment.

Iterative improvement in the media planning process

Once one incrementality test has been conducted, we gain insights into which control groups create a good synthetic control group and how long a test should typically run to see a significant impact. These insights can aid in the modelling process of other incrementality tests of the same or similar nature.

Limitations of incrementality testing

Data collection

Typically 2-3 years of data is required to create an accurate synthetic control group. Additionally, the data must be of good quality as incomplete or inconsistent data can limit the accuracy of the results.

Selection of control groups

If the control groups used to generate the synthetic control group don’t adequately capture the potential outcomes of the treatment group in the absence of the treatment, the synthetic control may become inaccurate.

Overall, incrementality testing is a valuable tool that allows marketers to gather insights for media planning. By analysing data and understanding how different media channels impact KPIs in the absence of confounding variables, businesses can make informed decisions on marketing strategy.

If you’re looking to improve media planning and overall business performance, based on data-driven statistical evidence, incrementality testing is crucial. Get in touch with one of our media solutions experts if you have any questions or need assistance with incrementality testing. Alternatively, explore our media planning services page to learn more about how we can help.