| A summary of main points |
|---|
| Generative engine optimisation (GEO) prioritises being cited by AI over traditional ranking. Instead of just aiming for top search results, GEO focuses on making your content interpretable and trustworthy enough for Large Language Models (LLMs) to use it as a direct source in their answers. |
| This discipline is an evolution of SEO, requiring both existing tactics and new tweaks. While good SEO fundamentals (like high-quality content and E-E-A-T) are still essential, GEO demands added emphasis on clarity, content structured for summarisation, a question and answer format, and, critically, earning brand citations. |
| Brand citations and consistent online presence are crucial for AI visibility. LLMs favour trusted sources. Your brand’s likelihood of being cited heavily depends on quality backlinks, positive press coverage, clearly structured factual content and maintaining a consistent brand message across all your online platforms. |

- Firstly, what is generative engine optimisation?

- Why are people moving towards AI engines for answers?

- Because they can ask questions like a human

- Because they get synthesised answers

- GEO vs SEO: Is there really that much of a difference?

- The main nuances between GEO and SEO

- SEO tactics still very much matter in a GEO world

- Overall general advice on how to succeed in GEO

- 1. Publish authoritative content

- 2. Optimise for clarity, not just clicks

- 3. Think in questions and answers

- 4. Stay human

- 5. Prioritise information gain

- 6. Above all, brand citations and mentions

- If citations of my brand are one of the most important factors for success, how do I know what influences that?

- 1. Whether your brand is linked to credible sources

- What matters

- 2. Your press coverage

- Effective Digital PR helps you

- 3. The structure of your content

- Make it easy for the machine

- 4. Your brand is consistent across the internet

- Tips for consistency

- Here’s a snippet example of how to optimise for GEO

- GEO additions

- Are there nuances for how to optimise for LLMs across ecommerce, B2B and local?

- 1. Ecommerce

- Key nuances

- 2. B2B

- Key nuances

- 3. Local

- Key nuances

- How to track GEO success

- Key metrics to track in LLMs

- How LLM tracking differs from SEO

- Tracking across multiple LLMs

- Referral traffic

- Clicks vs. Impressions

- Final thoughts - your GEO checklist

Firstly, what is generative engine optimisation?

Generative engine optimisation (GEO) is the art of optimising your content so large language models (LLMs) can interpret, trust and cite it. While traditional SEO is heavily focused on ranking, GEO is more about resonance.

Right now, AI search engines aren’t dominating traffic, with Google still holding a significant lead. However, evidence points to LLMs gaining an increasing share of the market, and we’re already seeing brands that we work with making sales directly from users searching on these AI engines.

What makes this traffic so valuable is that it’s often high-intent, leading to higher conversion rates than standard organic traffic. This is happening for a few key reasons: people trust chatbots and perceive their recommendations as more personalised, and the conversational nature of the interaction makes users feel more invested in the outcome than they would after a quick Google search.

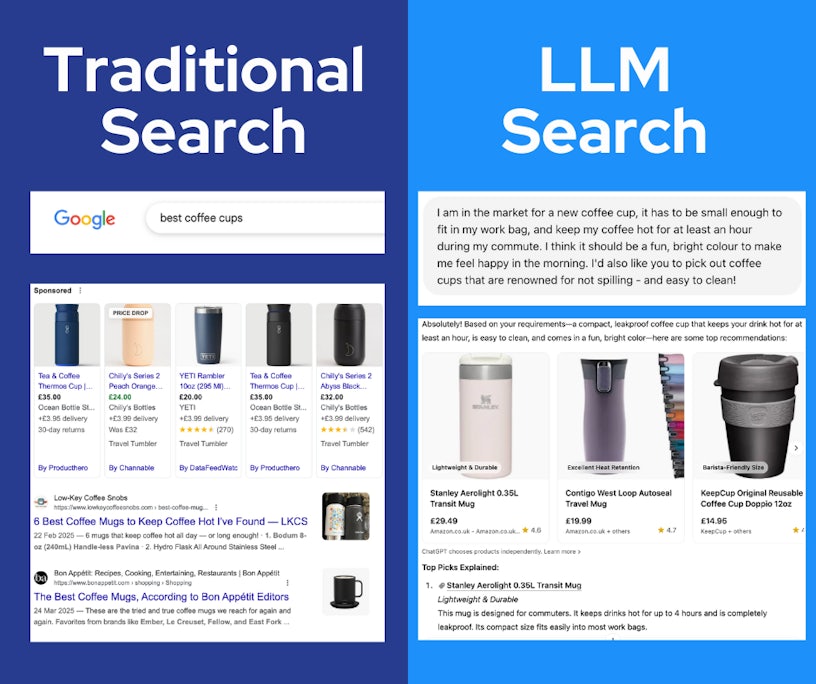

Why are people moving towards AI engines for answers?

Because they can ask questions like a human

‘Best affordable ergonomic desk chair UK 2025’ isn’t how humans talk.

Traditional search engines require us to translate our natural questions into search engine language for many queries – a string of keywords that can sometimes feel clunky and unnatural.

Particularly for longer tail queries, we often have to guess what terms the search engine would understand best, rather than just asking the question directly. LLMs, on the other hand, understand and respond to natural language, making the interaction feel much more human and intuitive, like having a real assistant.

Because they get synthesised answers

Traditional search presents a list of potential answers in the form of search results, leaving the work of sifting through them to the user. LLMs aim to give you the direct answer or a concise summary upfront, saving you valuable time and the task of hopping between multiple websites to piece together one piece of information.

AI overviews in traditional search now synthesise and summarise chunks of information for users, but they tend to be less direct than generative engines and are still (notoriously) prone to getting things wrong. LLMs can hallucinate too, but many users prefer searching in a less ‘busy’ place with a more personalised experience.

GEO vs SEO: Is there really that much of a difference?

Yes… and no.

Both SEO and GEO demand structure, authority and visibility. But where SEO rewards relevance for a query, GEO rewards understandability and the ease with which your content can be summarised.

The core difference is that GEO is about being used as a source. Your content becomes part of someone else’s answer. Need support for GEO? Check out our Generative Engine Optimisation services here.

The main nuances between GEO and SEO

| Feature | SEO (Traditional Search) | GEO (Generative Search) |
|---|---|---|
| Goal | Rank at the top of SERPs | Be the answer in AI chats |
| Tactics | Keywords, backlinks, metadata | Clarity, authority, citations |
| Optimisation Focus | Web pages | Web presence |
| Success Metric | Click-throughs | Citations and mentions |
| Output | Links (results) | Summaries and direct answers |
| Content Format | Titles, headers, structured text | Factual, semantically rich content |

We’ll delve into this in more detail later on, but for now, it’s essential to remember that the work we already do to increase our visibility in search engines remains just as important, as it still relates to earning visibility in generative search.

While there are definitely nuances in approaches to increasing visibility between SEO and GEO, there are also lots of similarities. Research from Lily Ray, along with numerous other industry studies, reveals a significant overlap between sites that perform well in SEO and those most frequently cited in AI answers.

Think of GEO as an evolution, not a revolution. Good SEO is a prerequisite for good GEO. Don’t scrap the strategy – tweak it.

SEO tactics still very much matter in a GEO world

There’s plenty we know to do as SEOs that can directly translate into GEO success, such as:

| SEO Tactic | Why It Still Matters in GEO |
|---|---|
| High-quality content | LLMs love thorough, factual information |
| E-E-A-T | Signals authority, trust and expertise |
| Internal linking | Aids context and relationship mapping |
| Mobile optimisation | Ensures visibility and crawlability |

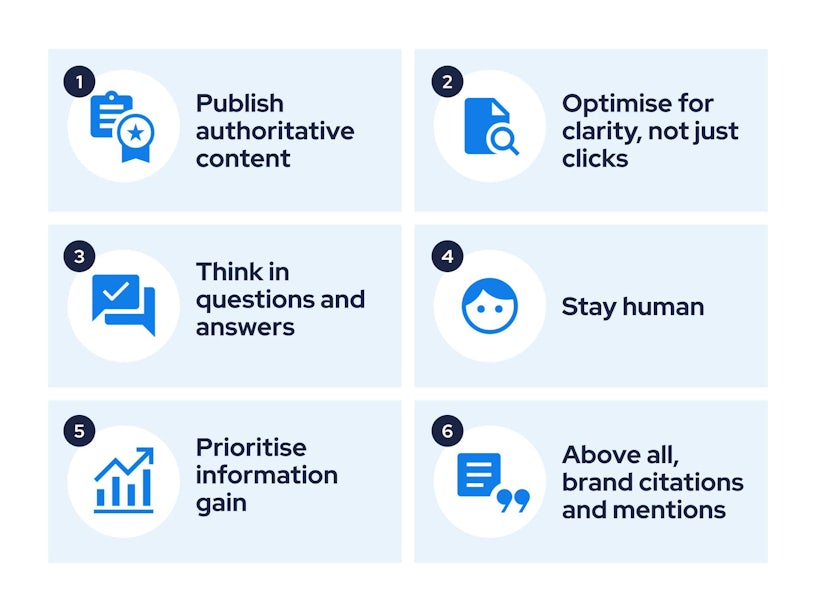

Overall general advice on how to succeed in GEO

We’ve seen the similarities and differences between SEO and GEO. So, aside from continuing to nail what we already know with our SEO caps on, what is the general advice on ‘tweaks’ that are useful to keep in mind?

| Insight 💡 |
|---|
| An LLM’s core knowledge is limited by its training data cutoff date; however, modern AI search engines are overcoming this by using a technique called Retrieval-Augmented Generation (RAG). This process allows the model to connect to live data sources, such as real-time search results, before generating a response. |
| Essentially, when a user asks a question, the AI first performs a live search to retrieve the most up-to-date and relevant information. This retrieved information is then used to augment the LLM’s prompt, providing it with current, factual context, which ensures the final answer is not only well-written but also grounded in the latest available information. RAG allows the AI to effectively bypass the static nature of the model’s original training data. |
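
To make the retrieve-then-augment idea concrete, here is a minimal sketch in Python. It is a toy under stated assumptions, not how any particular AI search engine works: the three documents and their `example.com` URLs are made up, retrieval is a simple word-overlap count rather than a real search index, and the final prompt is only printed rather than sent to a model.

```python
import re

# Hypothetical mini "index" standing in for live search results.
DOCUMENTS = [
    {"url": "https://example.com/coffee-cups",
     "text": "Most paper coffee cups cannot be recycled in household bins "
             "because of their plastic lining."},
    {"url": "https://example.com/travel-mugs",
     "text": "Reusable travel mugs reduce waste and many coffee chains "
             "offer a discount for using them."},
    {"url": "https://example.com/desk-chairs",
     "text": "An ergonomic desk chair supports the lower back during long "
             "working days."},
]


def tokenise(text):
    """Lowercase a string and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, top_k=2):
    """Rank documents by how many words they share with the question."""
    question_tokens = tokenise(question)
    scored = [(len(question_tokens & tokenise(doc["text"])), doc)
              for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Keep only the best matches that share at least one word.
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_prompt(question, retrieved):
    """Augment the user's question with retrieved passages and their sources."""
    context = "\n".join(
        f"[{i}] {doc['text']} (source: {doc['url']})"
        for i, doc in enumerate(retrieved, start=1)
    )
    return ("Answer using only the sources below and cite them by number.\n\n"
            f"{context}\n\nQuestion: {question}")


question = "Can coffee cups be recycled?"
sources = retrieve(question, DOCUMENTS)
prompt = build_prompt(question, sources)
print(prompt)
```

The point for GEO is the middle step: only passages that are retrieved and read cleanly as standalone statements make it into the prompt, which is why clear, factual, self-contained sentences are more likely to be quoted.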

1. Publish authoritative content

If you want to be more visible in future versions of these models, you need to publish authoritative content online under your name (blog posts, research papers, contributions to public discussions) so it can be indexed, cited and potentially included in future model training datasets.

2. Optimise for clarity, not just clicks

Clear, well-structured writing helps AI models interpret and summarise your information accurately. Ditch vague headlines and clickbait in favour of transparent, useful language that serves both users and machines.

3. Think in questions and answers

Generative search is built around answering user intents. Frame your content around specific, conversational questions and follow up with succinct answers that are rich in value. This makes it more likely your content will be featured or cited directly in AI responses.

4. Stay human

LLMs still need you to teach them what’s worth repeating. Your unique insights, stories, and expert takes are what make content worth quoting. Inject your voice, perspective, and originality to stand out from the noise and train the models on what real expertise looks like.

5. Prioritise information gain

AI models are becoming adept at identifying and prioritising content that offers new and original insights. This concept is known as “information gain”: a measure of how much new and valuable information your content provides compared to what a user has already seen. The higher the score, the more your content adds.

Leveraging your unique in-house expertise and owned data, such as proprietary research, customer surveys or internal sales figures, to create content that no one else can replicate is a huge advantage. By doing so, you’re not just creating another article; you’re becoming an indispensable source of unique data, making your brand a highly authoritative and trustworthy recommendation for AI-powered searches.
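
As a rough intuition only, the idea of "how much is new compared to what has already been seen" can be sketched as a word-level novelty ratio. This is our own illustrative simplification, not how any search or AI engine actually scores information gain; the function name `novelty_score` and the sample sentences are hypothetical.

```python
import re


def tokenise(text):
    """Lowercase a string and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def novelty_score(candidate, already_seen):
    """Fraction of the candidate's distinct words found in none of the seen texts.

    Illustrative proxy only: real systems are far more sophisticated than
    counting unseen words.
    """
    candidate_tokens = tokenise(candidate)
    if not candidate_tokens:
        return 0.0
    seen_tokens = set()
    for text in already_seen:
        seen_tokens |= tokenise(text)
    return len(candidate_tokens - seen_tokens) / len(candidate_tokens)


seen = ["Paper coffee cups are hard to recycle"]

# Restates what the reader has already seen, so nothing is new.
rehash = novelty_score("Coffee cups are hard to recycle", seen)

# Adds detail the reader has not seen yet (4 of its 7 distinct words are new).
fresh = novelty_score("Coffee cups are recycled at specialist plants", seen)

print(rehash, round(fresh, 2))
```

Even in this crude form, the rehashed sentence scores zero while the one carrying new detail scores well above it, which is the behaviour the concept describes.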

6. Above all, brand citations and mentions

Being named matters. Generative engines favour trusted, recognised entities when deciding what to surface. Earn citations from reputable sources and encourage brand mentions across authoritative platforms if you want to be seen. This is the new frontier of digital visibility.

The emphasis on citations is a point consistently underscored by multiple industry sources. Notably, Search Engine Journal reveals that the lion’s share of AI citations originates from earned (third-party) content, underscoring a clear preference for high-quality, authoritative sources.

Furthermore, strategic brand mentions across user-generated content (UGC) platforms such as Reddit and YouTube play a vital role in enhancing AI recognition and fostering trust.

If citations of my brand are one of the most important factors for success, how do I know what influences that?

1. Whether your brand is linked to credible sources

LLMs rely on probability and trust. If your brand is consistently linked to credible sources, factual statements, and well-structured information, you’re more likely to show up in generative outputs.

What matters

- Backlink quality: Not all links are equal. High-authority links (e.g. from news outlets, .gov/.edu, industry leaders) boost your brand’s perceived credibility

- Mentions in trusted sources: Even unlinked brand mentions in respected publications (Forbes, BBC, niche industry journals) can reinforce authority

- Citation frequency: The more frequently your brand is referenced across reliable sites, the more likely it is to appear in generative summaries

2. Your press coverage

When your brand gets press coverage, it’s doing more than just reaching an audience. It’s actually feeding the AI models that are shaping how information is found and shared. News sources are highly trusted, and what’s written about your brand can have a surprisingly long shelf life in the generative AI space. This is because once a model is trained, its data is ‘frozen’ until the next update, so any coverage included stays in the model’s knowledge for as long as that version is in use.

Effective Digital PR helps you

- Maintain consistent brand messaging across various publications

- Associate your brand with new, relevant topics, e.g. ‘[brand] + sustainability’

- Produce a wealth of structured, quotable content that AIs can easily ingest

3. The structure of your content

It’s not just what you say, but how you say it. LLMs struggle with ambiguity and fluff. Content that’s well structured, semantically rich and factually grounded is far more likely to be cited.

Make it easy for the machine

- Use bullet points, FAQs and summary boxes

- Include clear citations for facts, stats and external claims

- Avoid over-reliance on visual content – LLMs prioritise text

4. Your brand is consistent across the internet

LLMs are diligent in that they read everything, but they’re wary of contradictions. If your brand is described ten different ways across the web, it becomes harder to trust and harder to cite. This is where it becomes vital to align Digital PR, SEO, and content teams around consistent messaging.

Tips for consistency

- Use the same ‘blueprint’ or key messaging across your owned content and third-party placements

- Regularly audit how your brand appears in AI tools by manually asking ChatGPT, Perplexity, Gemini, etc. Nik Ranger provides a brilliant strategy on auditing your brand in generative search and how to use this information to inform changes

- Update old content to align with your current positioning

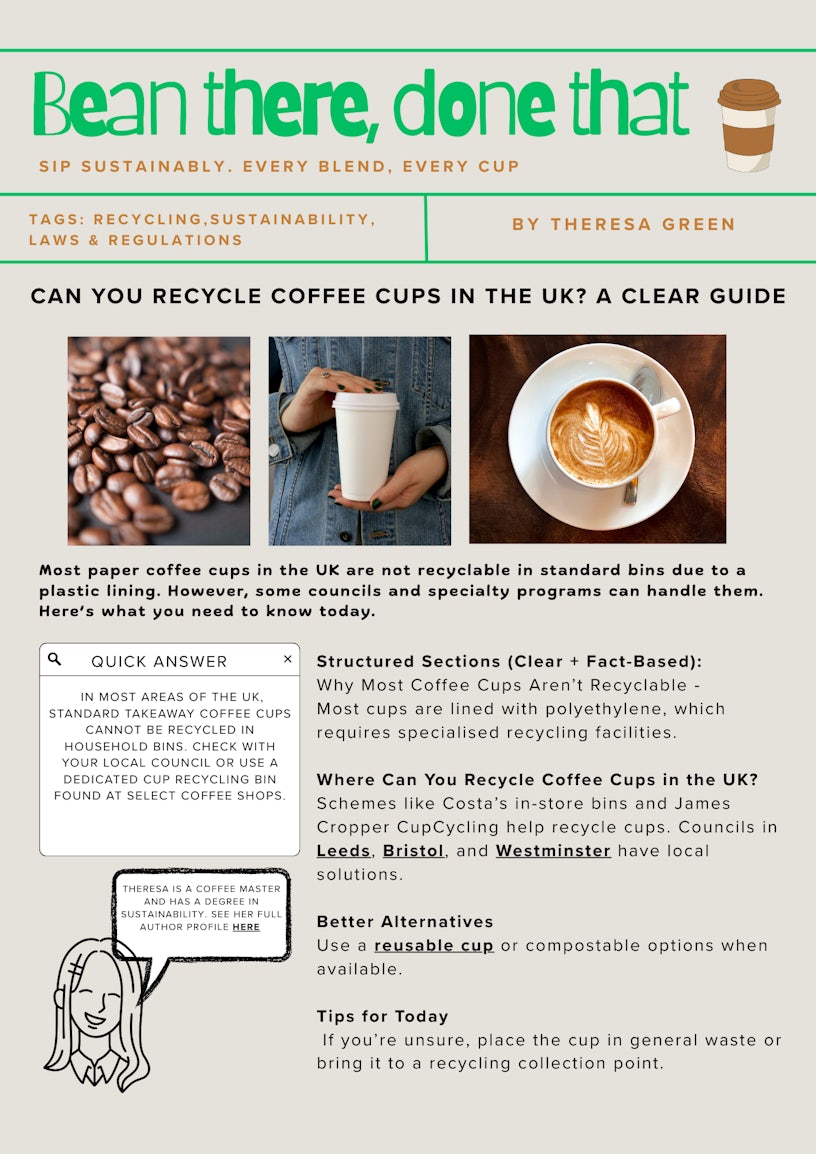

Here’s a snippet example of how to optimise for GEO

In this short example, we’re focusing on optimising an ecommerce page with GEO in mind. Remember that this will slightly differ from B2B or local pages, which we’ll get to later.

- Conversational H1: Can You Recycle Coffee Cups in the UK? A Clear Guide

- Quick answer box format (top of page): Most paper coffee cups used in the UK cannot be recycled in standard household bins due to their plastic lining. While they look recyclable, most are coated with polyethene, which makes them waterproof but difficult to process in regular recycling systems.

- Main body: A 2023 report from WRAP (Waste & Resources Action Programme) estimated that fewer than 1 in 400 coffee cups are actually recycled correctly. That’s because only a handful of facilities – like James Cropper’s CupCycling plant – can separate the plastic and paper layers effectively. If you’re unsure whether a cup is recyclable, it’s safest to put it in general waste, or check with your local council recycling service.

- Better alternatives section:

  - Reusable cups: Most UK coffee chains now offer discounts for bringing your own cup. Brands like KeepCup and Stojo are popular, barista-friendly options.

  - Compostable cups: Look for cups certified to EN13432 or TUV OK compost standards. These break down in industrial composting facilities, though they still need correct disposal.

  - Recyclable-lined cups: Some newer paper cups use water-based or plant-based linings (e.g. Frugalpac), which are accepted in standard paper recycling at select facilities.

  - Cup return schemes: Chains like Starbucks and Costa now offer return-and-reuse schemes where used cups are collected and processed centrally.

GEO additions

You may notice that this way of optimising doesn’t differ too greatly from how we’d optimise for traditional search, but here’s what we made sure we covered:

- Bold quick answer box → helps LLMs extract concise, citable responses

- Stats with citations → adds authority and citation-ready content

- Named entities (James Cropper, EN13432) → boosts recognition

- Clear headings and chunked information, such as the ‘better alternatives’ section → easier for LLMs to summarise

- Conversational tone and question format title → mirrors how users query within AI tools

- Link to source/mention of local council → encourages LLMs to cite or connect your content to factual localised information

- This page would also have structured data markup (FAQPage, HowTo, LocalBusiness) → to allow the LLM to see additional context
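
For the structured data point, here is a minimal sketch of what FAQPage markup for the coffee cup page could look like, generated with Python's standard `json` module. The question and answer text is lifted from the example above; the helper name `faq_jsonld` is our own, and how you inject the resulting script tag depends on your CMS.

```python
import json


def faq_jsonld(pairs):
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


faq = faq_jsonld([
    ("Can you recycle coffee cups in the UK?",
     "Most paper coffee cups used in the UK cannot be recycled in standard "
     "household bins due to their plastic lining."),
    ("What should I do if I'm unsure whether a cup is recyclable?",
     "Put it in general waste, or check with your local council recycling "
     "service."),
])

# Wrap the JSON-LD in the script tag that goes into the page's HTML.
script_tag = ('<script type="application/ld+json">\n'
              + json.dumps(faq, indent=2)
              + "\n</script>")
print(script_tag)
```

Keeping the marked-up answers word-for-word identical to the visible quick answer box avoids giving machines two slightly different versions of the same fact.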

Are there nuances for how to optimise for LLMs across ecommerce, B2B and local?

The overall general advice stays the same, but yes, there are definitely some differences. Here’s what we recommend for these scenarios.

1. Ecommerce

Goal: For LLMs to accurately describe your products, compare them to competitors and answer common shopping questions such as ‘what is the best travel mug’.

Key nuances

- Hyper-specific product descriptions: Go beyond basic features. Detail benefits, use cases, target audience and unique selling propositions. Think like a helpful sales assistant.

- Structured data: Use Product, Offer, Review, AggregateRating and Brand schema properly, and set the availability property on each Offer. This tells LLMs exactly what your product is, its price, ratings and if it’s in stock. Microsoft has confirmed it uses schema markup to help its LLMs.

- FAQs for products: Each product page should have an FAQ section that directly answers common questions about that specific item, e.g. ‘Is this waterproof?’ and ‘What size battery does it need?’

- Comparison content: Create ‘product A vs product B’ content and ‘best x for y’ content. This gives LLMs pre-digested comparison data that they can provide to users.

- User reviews & Q&A: Encourage and display detailed customer reviews. LLMs often pull insights from these.

- Focus on facts: LLMs are trained to detect and often disregard overly promotional or subjective language. Stick to verifiable facts, features and objective benefits.
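
To illustrate the structured data nuance, the sketch below builds Product markup with nested Brand, Offer and AggregateRating objects. The schema.org types and the `https://schema.org/InStock` value are real; the travel mug, its brand, price and rating figures are hypothetical placeholders, and `product_jsonld` is our own helper name.

```python
import json


def product_jsonld(name, description, brand, price, currency,
                   in_stock, rating, review_count):
    """Build a schema.org Product object with one Offer and an AggregateRating."""
    availability = ("https://schema.org/InStock" if in_stock
                    else "https://schema.org/OutOfStock")
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,
        "brand": {"@type": "Brand", "name": brand},
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": availability,
        },
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        },
    }


# Hypothetical product details, for illustration only.
product = product_jsonld(
    name="Example 350ml Travel Mug",
    description="Leak-proof, dishwasher-safe stainless steel travel mug "
                "that fits standard car cup holders.",
    brand="ExampleBrand",
    price=24,
    currency="GBP",
    in_stock=True,
    rating=4.6,
    review_count=128,
)
print(json.dumps(product, indent=2))
```

Note that the description sticks to verifiable features rather than promotional language, in line with the ‘focus on facts’ point above.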

2. B2B

Goal: For LLMs to understand your industry expertise, solve specific business problems, identify your solutions and recommend your services/software to other businesses.

Key nuances

- Thought leadership and problem/solution content: B2B buyers are looking for solutions to complex problems. Create in-depth whitepapers, case studies, long-form blog posts and webinars that address industry challenges and position your offerings as solutions.

- Define your niche and ideal customer: Be very clear about who you serve and what specific problems you solve. LLMs are good at matching queries like ‘FMS for small law firms’ or ‘cloud solutions for HR data’.

- Technical deep dives and explanations: For software or complex services, provide detailed documentation, API guides, and ‘how-to’ articles. LLMs can explain technical concepts, drawing from your content.

- Case studies with measurable results: LLMs can extract quantifiable outcomes. “Company X reduced costs by 30% using our ERP software” is prime LLM fodder.

- Expert author bios and E-E-A-T: Emphasise the expertise of your content creators. Strong author bios (with relevant credentials) on your blog and whitepapers signal authority to LLMs.

- Glossaries and definitions: Create comprehensive glossaries of industry terms. This helps LLMs understand jargon and define terms for users.

- Consistent entity recognition: Ensure your company name, product names and key personnel are consistently represented and linked across your digital footprint (website, LinkedIn, industry directories, Crunchbase).

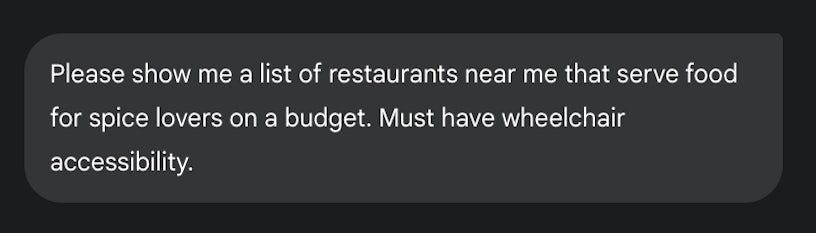

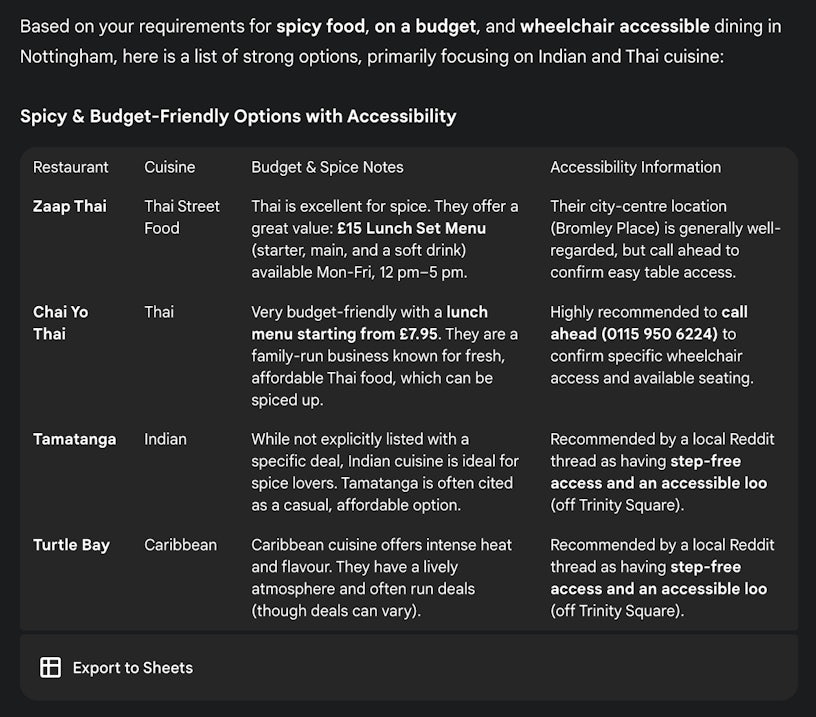

3. Local

Goal: For LLMs to recommend your business for local searches (e.g. ‘best coffee shop in Nottingham’, ‘plumber near me’), provide accurate business information (including hours, address and phone number) and summarise customer reviews.

Key nuances

- Google Business Profile (GBP) is paramount: This is arguably the most critical factor. Optimise your GBP with accurate hours, services, photos, and categories. LLMs frequently pull directly from this data.

- Local structured data: Implement LocalBusiness schema on your website. This includes your Name, Address, Phone number, opening hours, services offered and accepted payment methods.

- Consistency across the web: Ensure your business name, address, and phone number are identical across your website, GBP, Yelp, Facebook and any other local directories. Inconsistencies confuse LLMs.

- Accumulate & respond to reviews: LLMs factor in review sentiment and common themes. Encourage reviews on Google, Yelp, TripAdvisor, etc., and respond to them.

- Service area clarity: If you’re a service-area business (e.g., plumber, electrician), clearly list the specific areas you serve on your website.

- Local content: Create blog posts or pages that address local needs or events (e.g., “Best dog parks in [city]”, “Things to do in [area]”, “Our guide to [local event]”).

- Q&A sections about local services: Answer hyper-local questions on your site or GBP Q&A, like “Do you offer emergency plumbing in [specific suburb]?”
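
The ‘consistency across the web’ check can be done by hand, but a small script makes it repeatable. Here is a minimal sketch, assuming you have already copied your name, address and phone number from each platform into a dictionary; the business, its listings and the UK-style phone normalisation are all hypothetical, and the function names are our own.

```python
import re

FIELDS = ("name", "address", "phone")


def normalise_nap(listing):
    """Reduce a listing to comparable (name, address, phone) strings."""
    name = re.sub(r"\s+", " ", listing["name"]).strip().lower()
    # Treat punctuation and spacing differences in addresses as equivalent.
    address = re.sub(r"[^a-z0-9]+", " ", listing["address"].lower()).strip()
    phone = re.sub(r"\D", "", listing["phone"])
    # Assumption: UK numbers, so +44 115... and 0115... should match.
    if phone.startswith("44"):
        phone = "0" + phone[2:].lstrip("0")
    return name, address, phone


def find_inconsistencies(listings):
    """Return fields that have more than one distinct value across sources."""
    values = {field: {} for field in FIELDS}
    for source, listing in listings.items():
        for field, value in zip(FIELDS, normalise_nap(listing)):
            values[field].setdefault(value, []).append(source)
    return {field: variants for field, variants in values.items()
            if len(variants) > 1}


# Hypothetical listings for a made-up coffee shop.
listings = {
    "website": {"name": "Bean There Coffee",
                "address": "12 High Street, Nottingham NG1 1AA",
                "phone": "0115 496 0123"},
    "gbp": {"name": "Bean There Coffee",
            "address": "12 High Street, Nottingham, NG1 1AA",
            "phone": "+44 115 496 0123"},
    "yelp": {"name": "Bean There Coffee Ltd",
             "address": "12 High St, Nottingham NG1 1AA",
             "phone": "0115 496 0123"},
}

issues = find_inconsistencies(listings)
for field, variants in issues.items():
    print(field, variants)
```

In this sample the phone numbers match once formatting is ignored, while the Yelp listing is flagged for both its name (‘Ltd’) and its address (‘St’ rather than ‘Street’), which are the kinds of small differences worth fixing at the source.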

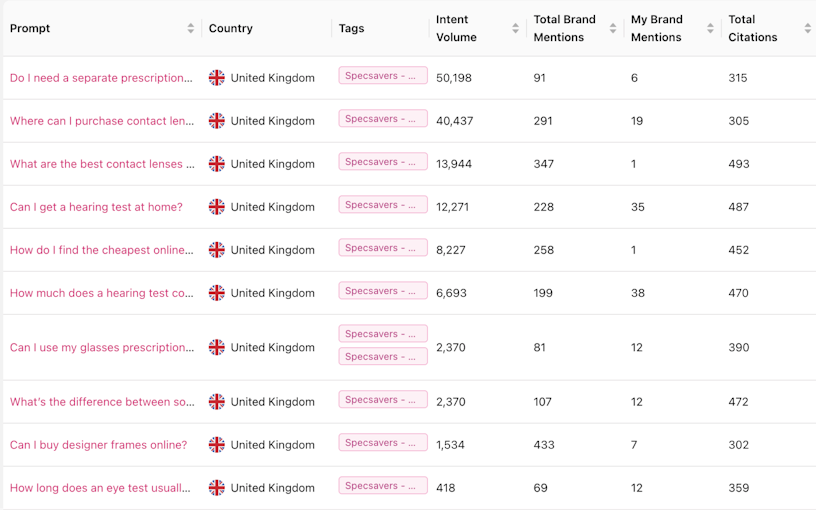

How to track GEO success

When it comes to tracking how successfully your brand is performing in AI search, you’re no longer just looking at organic rankings. You’re focused on how AI models and LLMs use and cite your content.

To truly understand your GEO performance, you need to focus on three core areas: your content’s visibility in AI responses, user engagement after AI referrals, and your brand’s authority and quality in the eyes of AI.

Key metrics to track in LLMs

There are a few key metrics any business will want to measure when tracking performance in LLMs:

- Brand mentions – How often is your business featured in the response to a prompt?

- Citations – Is your business referenced as a source when an answer is returned?

- Sentiment – What do LLMs say about your brand when it appears in a response?

How LLM tracking differs from SEO

LLM tracking works differently from traditional SEO. In search engines, users typically type just a couple of words per search, while LLM queries are much more conversational. For example:

- Search engine query: “Contact lenses online”

- LLM prompts:

- “Where can I buy high-quality contact lenses online with fast delivery?”

- “What are the best websites to order prescription contact lenses online?”

- “How do I choose the most reliable online retailer for contact lenses?”

- “Which online stores offer discounts and deals on contact lenses?”

- “Are there online services that help refill my contact lenses prescription easily?”


This change in behaviour is a challenge for SEO. There’s no longer a list of the top 20 “money” keywords to focus on. Instead, businesses need to build a large set of prompts around a topic and track them as a proxy for visibility. Using the example above, if we understood where a business ranked across these prompts, we’d have a clearer picture of overall LLM performance.
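
As a rough illustration of tracking a prompt set as a proxy for visibility, the sketch below computes a mention rate and a citation rate from saved responses. Everything in the sample data is hypothetical: the brand ‘Acme Lenses’, its domain, the answers and the cited URLs. In practice the responses would come from a tracking tool or a manual audit, and sentiment would need a separate review.

```python
from urllib.parse import urlparse

# Hypothetical saved responses: one record per tracked prompt.
RESPONSES = [
    {"prompt": "Where can I buy high-quality contact lenses online with "
               "fast delivery?",
     "answer": "Popular options include Acme Lenses and two other retailers.",
     "cited_urls": ["https://www.acmelenses.example/delivery"]},
    {"prompt": "What are the best websites to order prescription contact "
               "lenses online?",
     "answer": "Acme Lenses is often recommended for prescription orders.",
     "cited_urls": ["https://reviews.example/contact-lenses"]},
    {"prompt": "How do I choose the most reliable online retailer for "
               "contact lenses?",
     "answer": "Look for clear returns policies and verified reviews.",
     "cited_urls": []},
    {"prompt": "Which online stores offer discounts and deals on contact "
               "lenses?",
     "answer": "Several retailers run subscription discounts.",
     "cited_urls": ["https://deals.example/lenses"]},
]


def is_brand_domain(url, domain):
    """True if the URL's host is the brand domain or one of its subdomains."""
    host = (urlparse(url).hostname or "").lower()
    return host == domain or host.endswith("." + domain)


def visibility_summary(responses, brand, domain):
    """Share of prompts where the brand is named, and where its site is cited."""
    total = len(responses)
    if total == 0:
        return {"prompts": 0, "mention_rate": 0.0, "citation_rate": 0.0}
    mentioned = sum(brand.lower() in r["answer"].lower() for r in responses)
    cited = sum(any(is_brand_domain(u, domain) for u in r["cited_urls"])
                for r in responses)
    return {"prompts": total,
            "mention_rate": mentioned / total,
            "citation_rate": cited / total}


summary = visibility_summary(RESPONSES, "Acme Lenses", "acmelenses.example")
print(summary)
# {'prompts': 4, 'mention_rate': 0.5, 'citation_rate': 0.25}
```

Separating mentions from citations matters because a brand can be named in an answer without its own site being the source, as in the second sample response.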

Tracking across multiple LLMs

It’s also important to measure performance across different LLMs. While ChatGPT is currently the leader, other players like Perplexity and Claude are gaining traction. A robust tracking setup needs to cover all of them.

Right now, we use Otterly.ai, which allows us to track performance across ChatGPT, Perplexity, Claude, and other AI-driven platforms.

Referral traffic

The most direct way to measure LLM traffic is through referrals in GA4. This shows the clicks generated when someone visits your site directly from an LLM.

We’re finding that traffic from LLMs is usually high intent and converts at a strong rate compared to other channels. That said, it still makes up only about 1% of overall traffic – so it’s early days to invest heavily in complex tracking setups.
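
If you export session referrers from your analytics tool, a few lines of Python are enough to estimate the LLM share. This is a minimal sketch under assumptions: the referrer list is a made-up sample, and the domain list is our own guess at common LLM referrers, so check it against what actually appears in your reports.

```python
from collections import Counter
from urllib.parse import urlparse

# Assumed referrer domains for common AI assistants; adjust to your own data.
LLM_REFERRER_DOMAINS = {
    "chatgpt.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "claude.ai": "Claude",
    "gemini.google.com": "Gemini",
    "copilot.microsoft.com": "Copilot",
}


def classify_referrer(referrer_url):
    """Return the assistant name for an LLM referrer, or None otherwise."""
    host = (urlparse(referrer_url).hostname or "").lower()
    for domain, label in LLM_REFERRER_DOMAINS.items():
        if host == domain or host.endswith("." + domain):
            return label
    return None


def llm_referral_share(referrers):
    """Count sessions per assistant and the overall LLM share of sessions."""
    counts = Counter()
    for referrer in referrers:
        label = classify_referrer(referrer)
        if label:
            counts[label] += 1
    total = len(referrers)
    share = sum(counts.values()) / total if total else 0.0
    return counts, share


# Hypothetical sample of session referrers ("" means direct traffic).
sample = [
    "https://chatgpt.com/",
    "https://www.google.com/",
    "https://www.perplexity.ai/search",
    "https://www.google.com/",
    "",
]

counts, share = llm_referral_share(sample)
print(dict(counts), f"{share:.0%}")
```

The tiny sample here is only to show the mechanics; on real data the share will be far smaller, in line with the roughly 1% figure above.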

Clicks vs. Impressions

As LLMs become more widely used, we’ll see fewer clicks across the web as more “zero-click” searches happen. As an industry, we need to adapt to this shift and place more value on the impressions our brands earn inside LLM responses, even when they don’t drive immediate clicks.

Final thoughts – your GEO checklist

- Optimise for clarity, not just clicks

- Audit how LLMs describe your brand

- Track AI mentions, not just traffic

- Think in questions and answers

- Stay human. LLMs still need you to teach them what’s worth repeating

Optimising content to drive visibility in AI answers is an evolution of SEO, and an extension of the work we have already been doing. Keep being great SEOs, and keep GEO in mind as you do.

Impression can help you succeed as the AI landscape evolves, report on that success, and combine GEO knowledge with our SEO background to create dynamic strategies. Find out how we can support you as your GEO agency by positioning your brand as an authority to earn frequent mentions within LLMs.