September brought a large number of updates across the industry, including broad algorithm updates. The most discussed was the September 2019 Core Update, a broad core algorithm update that began rolling out on the 24th.

Several other industry updates were announced during the month, including Google changing the way it handles link attributes, Google Search Console introducing fresher data, new rules for review rich results, and more.

September Core Algorithm Update

On 24th September 2019, Google pre-announced on Twitter that the Core Update would start rolling out within the next couple of hours, a process which can take up to a couple of days. At the time of June's algorithm update, Google stated that it would pre-announce core algorithm updates to the community, to help those in the industry be more proactive and understand why fluctuations may be occurring. Pre-announcing also lets Google name the update officially, avoiding the kind of confusion within the industry that was seen with March's 'Florida 2' Core Algorithm Update.

Later today, we are releasing a broad core algorithm update, as we do several times per year. It is called the September 2019 Core Update. Our guidance about such updates remains as we’ve covered before. Please see this blog for more about that: https://t.co/e5ZQUAlt0G

— Google SearchLiaison (@searchliaison) September 24, 2019

As always, Google advises that there is nothing specific to act on and that some ranking fluctuations are likely during this period. However, to reduce the risk of drops when Google reassesses your content during these broad algorithm updates, it recommends following the E-A-T (Expertise, Authoritativeness, Trustworthiness) guidelines and making sure the content is relevant for users.

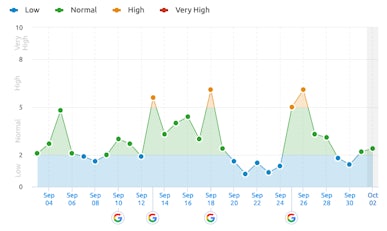

On 27th September, when we expected the algorithm update to be finishing, we monitored the impact and analysed what our peers within the community had reported. Across the industry, the September 2019 Core Update does not appear to have been as 'strong' as previous core updates, a pattern that shows up when comparing volatility across different sectors. YMYL (Your Money or Your Life) sites appear to have been affected the most. SEMrush Sensor tracked the volatility within the UK against the Google algorithm updates.

Note: interestingly, fluctuations were also reported within the travel sector (particularly in Germany and Spain), which is likely to be a knock-on effect of the closure of Thomas Cook. Following the travel company's compulsory liquidation, its URLs were redirected host-wide to an administration homepage.

Further Industry Updates in September

Unconfirmed Google Algorithmic Updates

On the 5th, 13th and 16th of September, the industry commented on various unconfirmed Google updates following erratic fluctuations within the SERPs. Some webmasters saw huge drops in traffic, in some cases of up to 70%. Changes were recorded across a number of organic search tracking tools and even continued across the weekend. Google did not confirm anything, but many suspected that another Core Update was due.

Nofollow, Sponsored & UGC Links

On 10th September 2019, Google announced that it will change the way it handles link attributes from March 2020. The announcement matters to digital PRs and SEOs because it gives them an updated way to identify the nature of a link: nofollow, sponsored or UGC (user-generated content). The change is intended to help combat spammy content and to provide users with the most relevant content for their search query.

Read more in the extensive roundup from our Head of Digital PR, Laura, here.
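As a rough illustration of how a page's links could be audited against these attributes, here is a minimal Python sketch. It assumes the three values appear as tokens in a link's `rel` attribute; the class name `LinkRelAudit`, the function `audit_links` and the example.com URLs are hypothetical and exist only for this example.

```python
from html.parser import HTMLParser

# The three link qualifiers covered in this section.
QUALIFIERS = ("nofollow", "sponsored", "ugc")


class LinkRelAudit(HTMLParser):
    """Collects every <a href> on a page with the qualifiers found in its rel attribute."""

    def __init__(self):
        super().__init__()
        self.links = []  # list of (href, [qualifiers]) tuples

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr_map = dict(attrs)
        href = attr_map.get("href")
        if href is None:
            return
        # rel can hold several space-separated tokens, e.g. rel="ugc nofollow".
        rel_tokens = (attr_map.get("rel") or "").lower().split()
        found = [q for q in QUALIFIERS if q in rel_tokens]
        self.links.append((href, found))


def audit_links(html):
    """Return (href, qualifiers) for each link in the given HTML string."""
    parser = LinkRelAudit()
    parser.feed(html)
    return parser.links


# Hypothetical sample markup for illustration only.
sample = """
<a href="https://example.com/partner" rel="sponsored">Partner offer</a>
<a href="https://example.com/forum-post" rel="ugc nofollow">Forum link</a>
<a href="https://example.com/guide">Editorial link</a>
"""

for href, qualifiers in audit_links(sample):
    print(href, qualifiers or ["(none)"])
```

A list like this makes it easier to spot paid or user-generated links that carry no qualifier at all.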

Breadcrumb structured data

On September 19, Google announced that breadcrumb structured data reports would now be available in Google Search Console (GSC), under the 'Enhancements' section. As with other GSC features, webmasters receive an alert email if the breadcrumb markup is causing an issue for the site.

Breadcrumbs matter because they leave a 'trail' indicating where a landing page sits within the hierarchy of the website's architecture. This helps both users and crawlers to navigate their way around your site. Google uses breadcrumb markup within the body of a webpage to classify information from the landing page.
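To make the 'trail' concrete, the sketch below builds breadcrumb markup as JSON-LD using the schema.org `BreadcrumbList` and `ListItem` types. It is only an illustration: the helper names `breadcrumb_jsonld` and `breadcrumb_script_tag` and the example.com URLs are made up for this post, and JSON-LD is just one of the formats breadcrumb markup can take.

```python
import json


def breadcrumb_jsonld(trail):
    """Build a schema.org BreadcrumbList from (name, url) pairs, ordered home -> current page."""
    return {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": position, "name": name, "item": url}
            for position, (name, url) in enumerate(trail, start=1)
        ],
    }


def breadcrumb_script_tag(trail):
    """Wrap the JSON-LD in a script tag ready to paste into a page template."""
    payload = json.dumps(breadcrumb_jsonld(trail), indent=2)
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Hypothetical trail for illustration only.
trail = [
    ("Home", "https://example.com/"),
    ("Blog", "https://example.com/blog/"),
    ("September updates", "https://example.com/blog/september-updates/"),
]
print(breadcrumb_script_tag(trail))
```

Each `position` value records how deep the page sits in the hierarchy, which is the same information the visible breadcrumb trail gives a user.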

Google Search Console provides ‘fresher’ data

Towards the end of September (23rd), GSC announced that its reports now include data from as recently as six hours prior. This is a significant update, because previously we had to work with data sets that were three days old. But why is this helpful? Having more recent data helps us to fix issues and understand the true performance of a website, particularly after a busy period for the business or after fixing an issue.

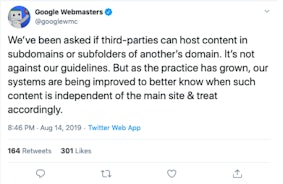

Google begins tackling subdomain and subfolder leasing

On 29th August, Google took action against sections of sites that were being leased out by penalising their potential to rank organically. Google had warned sites in early August that the practice, while not against their guidelines, would be viewed as separate content to the rest of the site, thereby hinting that the leased sections would no longer benefit from the overall domain authority.

True to its word, the search engine giant has since started tackling sites with leased sections, and several webmasters have reported noticeable drops in organic performance.

Google outlines new rules for review rich results

After months of speculation, it appears that Google has now tightened the rules around when a review rich result can be shown in the SERPs. Several SERP tracking tools have reported a marked drop in search results with these rich results, with some tools reporting a 5% drop in SERP presence.

Google has become stricter about when a page's review schema markup is eligible to be shown in its search results. Google has revealed that it will only show review rich results for:

- A clear set of schema types for review snippets

- Non “self-serving” reviews

- Reviews that have the name property within the markup

If your website has review schema implemented, our advice is to review how Google presents your pages in the search results and monitor the CTR of your website and your competitors.
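As a starting point for that review, the sketch below checks one of the rules above: whether review markup carries a name. It rests on assumptions: that the markup is JSON-LD already parsed into a Python dictionary, and that the required name is the name of the item being reviewed. The function `reviewed_item_name` and the sample data are hypothetical.

```python
# Types whose reviewed item is expected under "itemReviewed" (assumption for this sketch).
REVIEW_TYPES = {"Review", "AggregateRating"}


def reviewed_item_name(markup):
    """Return the name of the reviewed item, or None when the markup does not provide one."""
    if markup.get("@type") in REVIEW_TYPES:
        # Standalone review markup: the reviewed item is nested inside it.
        item = markup.get("itemReviewed")
    else:
        # Otherwise assume the markup is the item itself, carrying a nested review or rating.
        item = markup
    if not isinstance(item, dict):
        return None
    name = item.get("name")
    if isinstance(name, str) and name.strip():
        return name.strip()
    return None


# Hypothetical examples for illustration only.
with_name = {
    "@type": "Review",
    "itemReviewed": {"@type": "Book", "name": "Example Book"},
    "reviewRating": {"@type": "Rating", "ratingValue": 4},
}
without_name = {
    "@type": "Review",
    "itemReviewed": {"@type": "Book"},
    "reviewRating": {"@type": "Rating", "ratingValue": 4},
}

print(reviewed_item_name(with_name))
print(reviewed_item_name(without_name))
```

Pages where this returns `None` are worth checking first when monitoring for lost review snippets.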

Google releases new snippet settings for search listing flexibility

Google released information around its new snippet settings on 24th September. The new settings, which can be used either as a set of robots meta tags or an HTML attribute, will give webmasters greater control over how Google displays their listings.

The four settings that can be added to the robots meta tag are:

- “nosnippet”: A preexisting option that blocks the search engine from using any content on the page in textual snippets.

- “max-snippet:[number]”: This new setting allows webmasters to specify the maximum length of text that can be used in textual snippets.

- “max-video-preview:[number]”: This new setting allows you to specify the maximum duration in seconds of an animated video preview.

- “max-image-preview:[setting]”: This new setting allows you to specify the maximum size of image preview to be shown. Options are “none”, “standard” or “large”.

Google also revealed that the new meta tags could be combined to control multiple criteria at once. For example,

<meta name="robots" content="max-snippet:25, max-image-preview:standard">

Google has announced that results will be updated with these new rules from the end of October, giving webmasters around a month to prepare. The new settings will also be treated as directives rather than hints, meaning Google will honour a setting as long as it is implemented correctly.

These new options will not affect overall rankings but will affect how your results will show up in certain rich results. Bing and other search engines have not announced whether they will support these new snippet settings.
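For sites that generate their page templates programmatically, the settings above could be assembled with a small helper along these lines. This is a sketch only: the function `robots_meta` and its parameter names are hypothetical, and the validation reflects only the options listed in this post.

```python
# Allowed values for max-image-preview, as listed above.
IMAGE_PREVIEW_OPTIONS = {"none", "standard", "large"}


def robots_meta(nosnippet=False, max_snippet=None,
                max_video_preview=None, max_image_preview=None):
    """Build a robots meta tag that combines the chosen snippet settings."""
    directives = []
    if nosnippet:
        directives.append("nosnippet")
    if max_snippet is not None:
        directives.append(f"max-snippet:{int(max_snippet)}")
    if max_video_preview is not None:
        directives.append(f"max-video-preview:{int(max_video_preview)}")
    if max_image_preview is not None:
        if max_image_preview not in IMAGE_PREVIEW_OPTIONS:
            raise ValueError(f"unsupported max-image-preview value: {max_image_preview!r}")
        directives.append(f"max-image-preview:{max_image_preview}")
    if not directives:
        raise ValueError("at least one snippet setting is required")
    content = ", ".join(directives)
    return f'<meta name="robots" content="{content}">'


print(robots_meta(max_snippet=25, max_image_preview="standard"))
```

Rejecting unknown image preview values up front is a deliberate choice here, since a setting only takes effect when it is implemented correctly.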

Have we missed anything out? Let us know in the comments.

Read further algorithmic updates from the team, or contact the team today.