March was certainly a busy month in the world of SEO. After many webmasters noticed significant ranking fluctuations in February, Google confirmed in March that it had recently rolled out a broad core algorithm update. This update has become known as Florida 2 because it coincided with the Pubcon Florida SEO conference, as did the first Florida update in the early 2000s.

Alongside this major update, Google announced that it was no longer using rel=prev/next as an indexing signal. The news took many by surprise, as the search engine had previously recommended the markup as a way of ensuring paginated series were crawled and indexed appropriately. Google now says that it is smart enough to understand paginated series without the markup, but recommends that SEOs keep the code on their sites for other purposes.

Finally, Moz announced the first update to their Domain Authority (DA) algorithm in years. The update created a lot of buzz on Twitter, as SEOs and PR professionals were initially confused to see large drops in their DA scores. While Moz’s algorithm is completely separate from Google’s, many webmasters were worried that their sites would see a drop in rankings.

Google Algorithm Update March 2019: Florida 2

For the past couple of months, there has been plenty of chatter within the SEO community about keyword position and traffic fluctuations in the SERPs. Many online industry forums shared early observations of significant increases and decreases, and predicted that a core algorithm update was on the horizon given the scale of the changes in the SERPs.

It’s no secret that Google updates its algorithm every day, sometimes several times a day, but most of these changes go unnoticed. Florida 2 was on a different scale, so much so that Google officially confirmed it as a broad core algorithm update.

What is the Florida Google Algorithm Update?

To understand where Florida 2 got its name, it’s important to know the history of the original Florida update. The first Florida Google algorithm update was rolled out on 16th November 2003, with the aim of combating the spammy practices that were common in the SERPs at that time.

Google was aware that many SEOs were trying to manipulate its algorithm by stuffing keywords into the anchor text of links in order to win position 1. Around this time, many of these websites were e-commerce or affiliate sites, and the Florida update penalised only the sites that were involved in these practices.

What is the Florida 2 Algorithm Update?

Florida 2, also known as the March Core Algorithm Update, has been described as one of the biggest updates in years. It was rolled out on March 12th 2019, with traffic fluctuations mainly noticed on the 13th and 14th.

Last August’s Medic Algorithm Update appeared (though this was never confirmed) to focus on health, medical and YMYL (Your Money or Your Life) websites, meaning sites whose content can affect someone’s current or future well-being. Florida 2, by contrast, does not seem to target a specific category of website, so SEO experts need to understand how it is changing the Google algorithm more broadly. Updates like Florida 2 usually focus on rewarding sites with higher-quality content that matches user intent, rather than solely penalising websites.

What is the Impact of Florida 2?

You may be wondering: what impact could this have on my clients? Or on my own site? More than two weeks on, many changes have been reported, including but not limited to:

- Evidence of increased traffic to websites that provide high-quality content (E-A-T) and fast page speeds

- Changes in rankings for short term keywords

- Reports of changes in how Google interprets certain search queries, potentially leaning more towards intent

- Irregular changes in page rankings and traffic

- Mobile SERPs confirmed as more volatile than desktop

With this in mind, Google’s official search Twitter account tweeted guidance on what to expect from this algorithm update (pictured below).

This guidance suggests that there is no single “fix” for websites that have seen negative changes in their organic performance; instead, the focus should be on building great, informative, user-focused content.

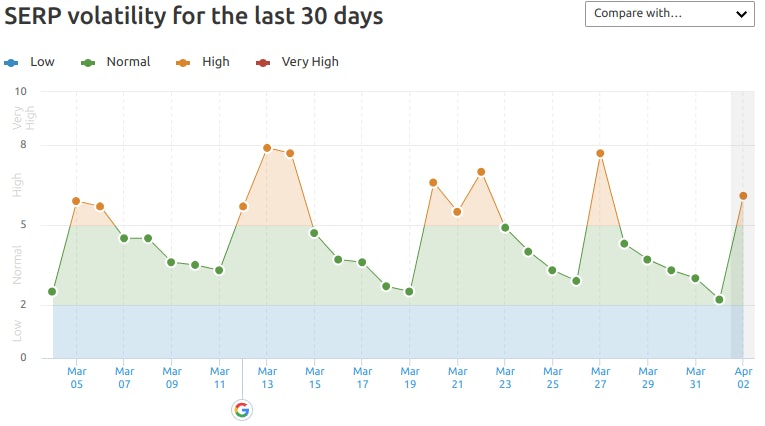

SERP volatility

Having reviewed SERP volatility over the last 30 days across a range of sites, we can see that many positions have fluctuated as a result of the Florida 2 update. The fluctuations appear across all website categories, which is consistent with a broad update that does not target a specific niche. It should also be noted that the mobile SERPs have been more unstable than desktop, which matters all the more as mobile search continues to grow significantly.

That said, some categories have been noticeably more volatile than others, including arts & entertainment, news and sports. This may suggest that on-site content in these categories is not always valuable to users, causing large tremors in the SERPs after the algorithm update. This has not been confirmed, as our analysis is based on secondary data sets provided by SEMRush; however, some tentative conclusions can be drawn.

How to recover from the Florida 2 impact

If you’ve been hit by the core Google algorithm update and are searching for ways to recover, we’ve collated key ways to compete in the highly volatile SERPs across different sectors, countries and languages.

Focus on Google’s E-A-T quality

E-A-T stands for expertise, authoritativeness and trustworthiness. These guidelines should be carefully built into every SEO strategy in order to create an informative user experience and support search rankings.

Following the E-A-T guidelines helps you provide superior-quality content that is more likely to compete effectively in the SERPs. Not only can this strategy aid recovery from the Medic and Florida 2 algorithm updates, it can also help protect the site from future core and targeted updates.

Citing the author

Google’s Quality Guidelines make it clear that information on a site should cite its author, or provide contact information and customer support details if it is a product or service page. This way, Google is able to assess the author’s expertise and whether the information source is trustworthy and reliable enough to deliver to its users.

This guideline cannot be stressed enough. Previous updates have also paid close attention to where information was sourced from. For example, as discussed earlier, last August’s Medic update appeared to focus on YMYL websites, which contain highly sensitive information, so Google would expect such information to come from authoritative sources that deliver reliable information to its users.

Removing poor quality content

In alignment with the E-A-T guidelines, it is recommended to remove or optimise poor-quality content on your website. Poor-quality content can include:

- Content with a low word count that is considered ‘thin’ and adds little or no value to the site

- Pages that have no links, or only low-quality links, pointing to them

- Content that overuses internal links or stuffs keywords, adding no extra value for the user

- Duplicate content, where an exact or near-identical version appears on more than one website
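A quick way to start such an audit is to flag pages that fall under a word-count cut-off and pages whose text exactly duplicates another page. The Python sketch below does both for a dictionary of URL-to-text pairs. The 300-word threshold and the `audit_pages` helper are illustrative assumptions (Google publishes no minimum word count), and exact-match hashing will not catch near-duplicates, which need a fuzzier technique such as shingling.

```python
import hashlib
import re
from collections import defaultdict

# Assumption: an illustrative cut-off only; Google publishes no minimum word count.
THIN_WORD_THRESHOLD = 300


def normalise(text: str) -> str:
    """Lower-case and collapse whitespace so trivial formatting differences don't hide duplicates."""
    return re.sub(r"\s+", " ", text.strip().lower())


def audit_pages(pages: dict[str, str], threshold: int = THIN_WORD_THRESHOLD) -> dict[str, list]:
    """Flag thin pages (fewer words than `threshold`) and groups of exact-duplicate pages."""
    thin = []
    by_hash: dict[str, list[str]] = defaultdict(list)
    for url, body in pages.items():
        if len(re.findall(r"\w+", body)) < threshold:
            thin.append(url)
        # Pages whose normalised text hashes identically are exact duplicates.
        digest = hashlib.sha256(normalise(body).encode("utf-8")).hexdigest()
        by_hash[digest].append(url)
    duplicates = sorted(sorted(urls) for urls in by_hash.values() if len(urls) > 1)
    return {"thin": sorted(thin), "duplicates": duplicates}


# Hypothetical example pages.
report = audit_pages({"/a": "Buy shoes", "/b": "buy   SHOES", "/c": "word " * 400})
print(report)  # {'thin': ['/a', '/b'], 'duplicates': [['/a', '/b']]}
```

Flagged pages are candidates for review, not automatic removal: a short page can still be the best answer to its query.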

Increasing trust signals

To continue improving your website’s E-A-T, it is recommended to create or strengthen your ‘about us’ page, increasing trust with your target audience. This is a great chance to display your credentials, your client portfolio and reviews. It also directly follows Google’s search quality guidelines, which state that “popularity, user engagement, and user reviews can be considered evidence of reputation”, and helps create a positive reputation for the business and the expertise of the site.

To enhance these trust signals further, add relevant Schema.org structured data markup to your HTML, giving Google and users supplementary information. This can also help your relevant content appear in rich snippets on the SERPs, without necessarily ranking on the first page.
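As an illustration of this kind of markup, the Python sketch below builds a Schema.org `Organization` block in JSON-LD, including a `ContactPoint` for the customer-support details mentioned earlier. The `organization_jsonld` helper, business name, URLs and phone number are hypothetical placeholders, and which properties are worth adding will depend on your site; Google’s own structured data documentation is the authority on what is eligible for rich results.

```python
import json


def organization_jsonld(name: str, url: str, logo: str, telephone: str,
                        same_as: list[str]) -> str:
    """Return a Schema.org Organization description wrapped in a JSON-LD <script> tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "logo": logo,
        # ContactPoint carries customer-support details.
        "contactPoint": {
            "@type": "ContactPoint",
            "telephone": telephone,
            "contactType": "customer service",
        },
        # Official profiles elsewhere on the web that represent the same organisation.
        "sameAs": same_as,
    }
    body = json.dumps(data, indent=2, ensure_ascii=False)
    # Escape "</" so a value can never close the surrounding <script> element early.
    body = body.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


# Hypothetical placeholder values.
print(organization_jsonld(
    "Example Ltd",
    "https://www.example.com",
    "https://www.example.com/logo.png",
    "+44-20-0000-0000",
    ["https://twitter.com/example"],
))
```

The output goes in the page’s HTML; once it is live, Google’s structured data testing tools can confirm that it parses as intended.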

Keep your site secure

An integral part of the E-A-T guidelines is the security of the people using the search engine. If you have been hit by an algorithm update and haven’t done so already, secure your site and your users by getting an SSL certificate and serving your pages over HTTPS. This is particularly important if you collect any sensitive information from your users. New and returning visitors are also likely to be wary, and less likely to convert, if this security indicator is missing.

Page speed

As discussed earlier, there is evidence of traffic increases for websites that have focused on their page speed. As Google has indicated that site and page speed is one of the signals its algorithm uses for ranking, focus your efforts on reducing the time it takes to load a page.

Actionable ways to improve your page speed include compressing your code and image files, leveraging browser caching, improving your server response time (ideally below 200ms) and shortening redirect chains to reduce load times for both users and crawlers.
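Redirect chains often build up over successive migrations (for example HTTP to HTTPS, then non-www to www, then a trailing-slash change). The Python sketch below follows a hypothetical redirect map, such as one exported from a site crawl, and rewrites every rule to point straight at its final destination so each old URL costs a single hop. The `resolve_chain` and `flatten_redirects` helpers and the example URLs are illustrative assumptions, not part of any particular tool.

```python
def resolve_chain(redirects: dict[str, str], start: str, max_hops: int = 10) -> list[str]:
    """Follow redirects from `start`, returning every URL visited in order."""
    chain = [start]
    seen = {start}
    current = start
    while current in redirects:
        current = redirects[current]
        if current in seen:
            raise ValueError(f"redirect loop detected at {current}")
        chain.append(current)
        seen.add(current)
        if len(chain) - 1 > max_hops:
            raise ValueError(f"more than {max_hops} hops from {start}")
    return chain


def flatten_redirects(redirects: dict[str, str]) -> dict[str, str]:
    """Point every source URL directly at the end of its chain, so each redirect is one hop."""
    return {source: resolve_chain(redirects, source)[-1] for source in redirects}


# Hypothetical chain built up by successive migrations.
redirects = {
    "http://example.com/shoes": "https://example.com/shoes",
    "https://example.com/shoes": "https://www.example.com/shoes",
    "https://www.example.com/shoes": "https://www.example.com/shoes/",
}
print(len(resolve_chain(redirects, "http://example.com/shoes")) - 1)  # 3 hops
print(flatten_redirects(redirects))  # every source now maps to https://www.example.com/shoes/
```

Loops are reported rather than silently flattened, since a looping redirect needs a human decision about where the URL should really go.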

Further Industry Updates

Google stopped using the rel=prev/next indexing signal

Last month Google announced that it was no longer recognising rel=prev/next as an indexing signal. The news caught many web publishers off guard, as they had been using the signal to help Google’s crawlers understand the relationship between component URLs in a paginated series, as seen on many e-commerce sites.

Google announced that it had quietly stopped recognising rel=prev/next a few years ago while it worked out a better way to deal with paginated series. It now claims that Googlebot is perfectly able to understand multi-part pages without the signal.

What does this mean for SEOs?

While Google has announced that it no longer uses rel=prev/next, webmasters do not need to remove the markup from their sites. There are several reasons for this:

Firstly, having rel=prev/next will not harm the performance of the site, meaning that any resource allocated to removing it would be better spent elsewhere. For example, Google’s decision not to use rel=prev/next means that a website’s internal linking structure is now more important than ever, so spending time on optimising this is a good strategy.

Secondly, Bing and other search engines still use the signal in their own algorithms. Removing rel=prev/next could affect their ability to handle a website’s paginated series, so if your website receives high volumes of traffic from other search engines, removing it could have a negative impact.
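For sites keeping the markup for Bing and other engines, the Python sketch below generates the `rel="prev"` and `rel="next"` link tags for one page of a series. It assumes, hypothetically, that page 1 lives at the base URL and later pages use a `?page=N` parameter; the `page_url` and `pagination_links` helpers are illustrative, so adapt `page_url` to your own URL scheme.

```python
def page_url(base_url: str, n: int) -> str:
    """Hypothetical URL scheme: page 1 is the base URL, later pages add ?page=N."""
    return base_url if n == 1 else f"{base_url}?page={n}"


def pagination_links(base_url: str, page: int, total_pages: int) -> list[str]:
    """Return the rel="prev"/rel="next" <link> tags for one page of a paginated series."""
    if not 1 <= page <= total_pages:
        raise ValueError("page must be between 1 and total_pages")
    tags = []
    if page > 1:  # the first page has no previous page
        tags.append(f'<link rel="prev" href="{page_url(base_url, page - 1)}">')
    if page < total_pages:  # the last page has no next page
        tags.append(f'<link rel="next" href="{page_url(base_url, page + 1)}">')
    return tags


for tag in pagination_links("https://www.example.com/shoes", 2, 3):
    print(tag)
```

The tags belong in the `<head>` of each page in the series; ordinary crawlable links between the pages remain important for Google, which now relies on them instead.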

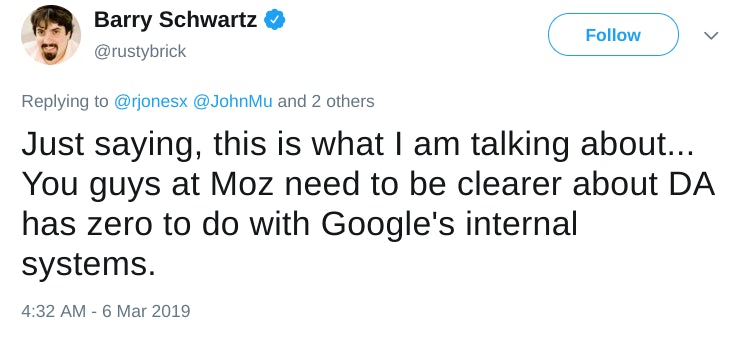

Moz Domain Authority 2.0

This isn’t a Google algorithm update, nor does Moz’s Domain Authority (DA) cause search rankings to rise or fall. However, there was so much hype around the subject on SEO Twitter that we had to include it in our March round-up.

On March 5th, Moz rolled out their first major update to Domain Authority in years. It’s a metric that many SEOs, PR professionals, clients and stakeholders rely on, so when people saw their scores drop, SEO Twitter had a lot to say.

Moz explains that their new DA score incorporates Spam Score and link quality patterns into a machine-learned model designed to keep pace with Google’s algorithm. The update is also largely driven by their new, much larger link index. Moz have argued that Domain Authority predicts the likelihood that one domain will outrank another.

If you have seen a drop in your DA score, Moz reason that your competitors probably have too. For SEO consultants and agencies, this means that link-building will become even more challenging, as obtaining high-DA links will be more demanding. And if you choose to dabble in the black hat world of ‘link-sellers’ and private blog networks (PBNs), you might find that their inventories or networks have disappeared overnight.

What does this mean for SEOs?

We can assume that Domain Authority will be far more dynamic than its previously static state. Moz state that updates to DA will be in line with algorithmic changes from Google, so we can expect DA scores to fluctuate from time to time.

If you use DA, you should always compare changes in your score with your competitors’. If your score has risen significantly more than theirs, it could reflect positive results from your previous link-building efforts; if it has fallen further, it could indicate that your competitors are doing something special.

It would also be wise to track your DA over time. DA 2.0 now provides historical scores, so you can follow changes. If your DA has decreased over time relative to your competitors’, it is a sign to pay closer attention to your outreach efforts.
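One simple way to make that comparison is to subtract the median change in your competitors’ DA from the change in your own over the same period. In the Python sketch below, the `relative_da_shift` helper and the scores in the usage line are hypothetical. A result near zero points to an industry-wide shift such as the DA 2.0 recalculation, while a clearly negative one suggests you are losing ground.

```python
from statistics import median


def relative_da_shift(own: tuple[int, int], competitors: dict[str, tuple[int, int]]) -> float:
    """Your DA change minus the median DA change of competitors over the same period.

    Each tuple is (score_before, score_after).
    """
    if not competitors:
        raise ValueError("need at least one competitor to compare against")
    own_change = own[1] - own[0]
    competitor_median = median(after - before for before, after in competitors.values())
    return own_change - competitor_median


# Hypothetical scores before and after the DA 2.0 update.
shift = relative_da_shift((45, 38), {"rival-a": (50, 42), "rival-b": (40, 35), "rival-c": (60, 52)})
print(shift)  # 1: a drop of 7 is in line with the competitors' median drop of 8
```

The median is used rather than the mean so that one competitor with an unusual swing does not skew the benchmark.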

Conclusion

You might have noticed our new traffic light imagery in this post. As you may have guessed, it is our new way of flagging updates that are of serious importance and may need to be reacted to ASAP (red), important updates that need to be considered when creating new strategies (amber), and minor updates (green) that will have little to no effect on your rankings and require little or no action.

As always, it is beneficial to keep an eye on your rankings during major algorithm updates such as Florida 2, and to wait 10-15 days after any significant fluctuations before making changes. This will help SEOs determine whether any changes in ranking can be attributed to the algorithm update or to alterations on the site.
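To see whether a keyword has settled, you could compare its average position in the week before an update with its average position 10 to 15 days afterwards. The Python sketch below assumes you have daily rank-tracking data as a date-to-position dictionary; the `rank_shift` helper, the window sizes and the example positions are illustrative assumptions.

```python
from datetime import date, timedelta
from statistics import mean


def rank_shift(positions: dict[date, int], update_day: date, before_days: int = 7,
               settle_from: int = 10, settle_to: int = 15) -> float:
    """Mean position 10-15 days after an update minus the mean position in the week before.

    Positive results mean the keyword moved down the SERP (a larger position number).
    """
    window_start = update_day - timedelta(days=before_days)
    settled_start = update_day + timedelta(days=settle_from)
    settled_end = update_day + timedelta(days=settle_to)
    before = [p for d, p in positions.items() if window_start <= d < update_day]
    # Days in between are ignored: rankings are still fluctuating then.
    after = [p for d, p in positions.items() if settled_start <= d <= settled_end]
    if not before or not after:
        raise ValueError("not enough tracked days in one of the windows")
    return mean(after) - mean(before)


# Hypothetical daily positions for one keyword around the March 12th 2019 rollout.
tracked = {date(2019, 3, d): 4 for d in range(5, 12)}
tracked.update({date(2019, 3, d): 20 for d in range(12, 22)})  # turbulent days, ignored
tracked.update({date(2019, 3, d): 7 for d in range(22, 28)})
print(rank_shift(tracked, date(2019, 3, 12)))  # 3: settled about three places lower
```

Comparing averages over windows, rather than single days, keeps one unusually good or bad day from driving the decision.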

We know that a lot of SEOs, marketers and bloggers alike use DA to qualify sites, so a hit to your score is an unwelcome surprise. Explaining to your clients or managers that an update is the reason their Domain Authority has dropped sharply isn’t the easiest task. With this in mind, it’s best to compare any fluctuations in DA with your competitors’ to determine whether the industry they operate in has been affected too.

Have we missed anything out? Let us know in the comments.

Read more algorithm update round-ups from Liv, Hugo and Georgie, or contact the team today.